Minimal Kubernetes 1.28.0 Cluster Deployment Based on Containerd

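Main steps:

1. Initialize the OS
2. Apply the cluster-related OS configuration
3. Decide on the deployment host and the hosts to be installed
4. Install and configure containerd
5. Install the Kubernetes toolset
6. Initialize all master nodes
7. Install the Kubernetes network plugin
8. Join the slave nodes to the cluster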

Target host specification:

- CPU: 2C4T
- Mem: 2 GB
- HDD: 20 GB
- OS: CentOS 7 (2207), x86_64

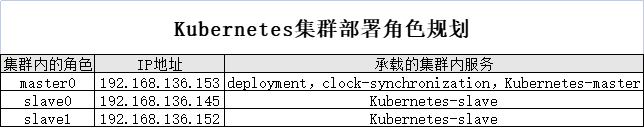

Kubernetes cluster role plan

| Host | IP | Roles |
|---|---|---|
| master0 | 192.168.136.153 | deployment, clock-synchronization, Kubernetes-master |
| slave0 | 192.168.136.145 | Kubernetes-slave |
| slave1 | 192.168.136.152 | Kubernetes-slave |

Main procedure after logging in to the host with the deployment role

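Step 1 Configure SSH key-based login

```shell
# Generate a key pair without a passphrase and authorize it locally
ssh-keygen -t rsa -P "" -f $HOME/.ssh/id_rsa
cp $HOME/.ssh/id_rsa.pub $HOME/.ssh/authorized_keys
# Copy the whole .ssh directory to every node (asks for each root password once)
for i in {192.168.136.153,192.168.136.145,192.168.136.152}; do scp -r $HOME/.ssh root@$i:$HOME; done
```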
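Every later step repeats the same `for i in ...; do ssh ...; done` pattern. As a sketch (the helper `run_on_hosts` and the `HOSTS`/`DRY_RUN` variables are hypothetical, not part of the original procedure), the loop can be wrapped once and also used to confirm that key-based login works: `-o BatchMode=yes` makes ssh fail instead of prompting for a password.

```shell
# Hypothetical helper, not from the original article.
# HOSTS: space-separated node list (defaults to the three hosts in the role plan).
# DRY_RUN=1: print the ssh commands instead of running them.
HOSTS="${HOSTS:-192.168.136.153 192.168.136.145 192.168.136.152}"

run_on_hosts() {
  local cmd="$1" host
  for host in $HOSTS; do
    if [ "${DRY_RUN:-0}" = "1" ]; then
      echo "ssh root@$host $cmd"
    else
      # BatchMode=yes: fail instead of prompting, so a missing key shows up as an error.
      # -n: do not read stdin, which keeps the loop safe inside pipelines.
      ssh -n -o BatchMode=yes "root@$host" "$cmd" || echo "FAILED on $host" >&2
    fi
  done
}
```

For example, `run_on_hosts hostname` should print each node's hostname with no password prompt once Step 1 has succeeded.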

Step 2 Configure name resolution for the cluster hosts

File: /etc/hosts

```shell
tee -a /etc/hosts <<-'EOF'
192.168.136.153 master0
192.168.136.145 slave0
192.168.136.152 slave1
EOF

for i in {192.168.136.145,192.168.136.152}; do scp /etc/hosts root@$i:/etc/; done
```
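Running Step 2 twice appends the entries twice. A minimal idempotent variant, assuming a hypothetical helper `add_host_entries` that takes the hosts file path followed by `"IP name"` pairs, appends only names that are not already mapped on a non-comment line:

```shell
# Hypothetical helper: append "IP NAME" entries only when NAME is not mapped yet.
add_host_entries() {
  local file="$1" entry name
  shift
  for entry in "$@"; do
    name="${entry#* }"   # text after the first space, e.g. "master0"
    # awk exits 0 only if some non-comment line already lists this name as a hostname field
    if ! awk -v n="$name" '!/^[[:space:]]*#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 } END { exit !found }' "$file" 2>/dev/null; then
      echo "$entry" >> "$file"
    fi
  done
}
```

Usage: `add_host_entries /etc/hosts "192.168.136.153 master0" "192.168.136.145 slave0" "192.168.136.152 slave1"`, then copy the file as in Step 2.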

Step 3 Set static hostnames

```shell
# Read "IP name" pairs from the cluster entries added in Step 2 (loopback and blank
# lines are skipped) and set each host's name over SSH.
grep -E '^192\.168\.136\.' /etc/hosts | while read -r server name; do ssh -n root@${server} "hostnamectl set-hostname ${name}"; done
```
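To confirm that Step 3 took effect, the following sketch (hypothetical `verify_hostnames`; the `192.168.136.` prefix comes from the role plan and is overridable through the hypothetical `CLUSTER_NET_PREFIX` variable) compares each node's actual hostname with the name recorded in /etc/hosts:

```shell
# Hypothetical check: print OK/FAIL per node; return non-zero if any name differs.
verify_hostnames() {
  local file="${1:-/etc/hosts}" prefix="${CLUSTER_NET_PREFIX:-192.168.136.}"
  local pairs ip name actual rc=0
  # Keep only lines whose first field (the IP) starts with the cluster prefix.
  pairs=$(awk -v p="$prefix" 'index($1, p) == 1 { print $1, $2 }' "$file")
  while read -r ip name; do
    [ -n "$ip" ] || continue
    actual=$(ssh -n "root@$ip" hostname)
    if [ "$actual" = "$name" ]; then
      echo "OK   $ip $name"
    else
      echo "FAIL $ip expected=$name actual=$actual"
      rc=1
    fi
  done <<EOF
$pairs
EOF
  return $rc
}
```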

Step 4 Check SELinux, swap and the OS firewall on every cluster host

```shell
# ens33 is the NIC name on these hosts; adjust it to match yours.
for i in {192.168.136.153,192.168.136.145,192.168.136.152}; do
  ssh root@$i "echo; echo 'Host IP info'; ip a | grep -E -w 'inet.*ens33'; echo; echo 'SELinux status'; sestatus; echo; echo 'OS firewall status'; systemctl status firewalld; echo; echo 'OS swap status'; swapon --show; echo"
done
```
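Step 4 only reports the state. kubelet refuses to run with swap enabled by default, the kubeadm installation guide for RHEL-family systems sets SELinux to permissive, and on a lab cluster like this one firewalld is often simply stopped. A sketch of persisting those changes, assuming the stock CentOS 7 files (`selinux_permissive` and `comment_out_swap` are hypothetical names; each takes the file path so it can be tried on a copy first):

```shell
# Runtime changes on each node (run via ssh, shown here as comments only):
#   setenforce 0
#   swapoff -a
#   systemctl stop firewalld && systemctl disable firewalld

# Persist SELinux permissive mode, e.g. in /etc/selinux/config
selinux_permissive() {
  sed -i 's/^SELINUX=enforcing$/SELINUX=permissive/' "$1"
}

# Comment out active swap entries, e.g. in /etc/fstab (lines already starting with # are left alone)
comment_out_swap() {
  sed -i '/^[^#].*[[:space:]]swap[[:space:]]/ s/^/#/' "$1"
}
```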

Step 5 Configure clock synchronization inside the cluster with chrony

Server configuration (/etc/chrony.conf on master0):

```
server 192.168.136.153 iburst
driftfile /var/lib/chrony/drift
makestep 1.0 3
rtcsync
hwtimestamp *
minsources 2
allow 0.0.0.0/0
local stratum 10
keyfile /etc/chrony.keys
logdir /var/log/chrony
```

Client configuration (/etc/chrony.conf on slave0 and slave1):

```
server 192.168.136.153 iburst
driftfile /var/lib/chrony/drift
makestep 1.0 3
rtcsync
keyfile /etc/chrony.keys
logdir /var/log/chrony
```
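The client configuration is identical on slave0 and slave1, so it can be generated once on master0 and copied. A sketch, with `write_chrony_client_conf` as a hypothetical helper that writes the client file shown above to a given path:

```shell
# Hypothetical helper: write the chrony client configuration from Step 5.
# $1 = output path, $2 = chrony server (defaults to master0's address).
write_chrony_client_conf() {
  local path="$1" server="${2:-192.168.136.153}"
  cat > "$path" <<EOF
server ${server} iburst
driftfile /var/lib/chrony/drift
makestep 1.0 3
rtcsync
keyfile /etc/chrony.keys
logdir /var/log/chrony
EOF
}
```

Usage: `write_chrony_client_conf ./chrony-client.conf`, copy it to each slave as /etc/chrony.conf, run `systemctl restart chronyd` on every host, then check with `chronyc sources -v` that 192.168.136.153 is listed as a source.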

Step 6 Install and configure containerd

```shell
# Add the docker-ce yum repository (Aliyun mirror), which ships containerd
for i in {192.168.136.153,192.168.136.145,192.168.136.152}; do ssh root@$i "wget -O /etc/yum.repos.d/docker-ce.repo https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo"; done
for i in {192.168.136.153,192.168.136.145,192.168.136.152}; do ssh root@$i "yum install -y containerd"; done
# Generate the default configuration on master0 and distribute it
containerd config default > /etc/containerd/config.toml
for i in {192.168.136.145,192.168.136.152}; do scp /etc/containerd/config.toml root@$i:/etc/containerd/; done
for i in {192.168.136.153,192.168.136.145,192.168.136.152}; do ssh root@$i "systemctl start containerd && systemctl enable containerd"; done
```

Note: adjust /etc/containerd/config.toml as needed before copying it to the other nodes (or re-copy it and restart containerd afterwards); at minimum, sandbox_image must be changed.
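A sketch of the minimum edit the note refers to, assuming containerd's default config.toml layout (a `sandbox_image = ...` line under the CRI plugin and `SystemdCgroup = false` under the runc options). The pause image `registry.aliyuncs.com/google_containers/pause:3.9` is an assumption: 3.9 is the pause tag kubeadm v1.28 uses, and the Aliyun mirror matches the other mirrors in this article; substitute any registry your nodes can reach. Setting `SystemdCgroup = true` keeps containerd consistent with the `--cgroup-driver=systemd` kubelet setting in Step 8. `patch_containerd_config` is a hypothetical name.

```shell
# Hypothetical helper: point sandbox_image at a reachable pause image and
# switch runc to the systemd cgroup driver. $1 = config.toml, $2 = pause image.
patch_containerd_config() {
  local file="$1" pause_image="${2:-registry.aliyuncs.com/google_containers/pause:3.9}"
  sed -i \
    -e "s#^\([[:space:]]*sandbox_image = \).*#\1\"${pause_image}\"#" \
    -e 's#^\([[:space:]]*SystemdCgroup = \)false#\1true#' \
    "$file"
}
```

Usage on master0, before the scp loop: `patch_containerd_config /etc/containerd/config.toml`; after copying, run `systemctl restart containerd` on every node.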

Step 7 Install crictl, the CRI client tool from the Kubernetes community (cri-tools)

```shell
# crictl-v1.28.0-linux-amd64.tar.gz (cri-tools release) is assumed to be in the current directory
for i in {192.168.136.153,192.168.136.145,192.168.136.152}; do scp ./crictl-v1.28.0-linux-amd64.tar.gz root@$i:/opt/; sleep 3; ssh root@$i "cd /opt/ && tar -xzf crictl-v1.28.0-linux-amd64.tar.gz -C /usr/bin/"; done

# Point crictl at the containerd socket
cat > crictl.yaml <<-'EOF'
runtime-endpoint: unix:///run/containerd/containerd.sock
image-endpoint: unix:///run/containerd/containerd.sock
timeout: 10
EOF

for i in {192.168.136.153,192.168.136.145,192.168.136.152}; do scp ./crictl.yaml root@$i:/etc/; done
```

Note: the crictl version should match the Kubernetes version (at least the same minor version).
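A small check for the note above, assuming the usual `crictl --version` output format (`crictl version v1.28.0`); `same_minor_version` is a hypothetical helper that compares only the major.minor part of two version strings:

```shell
# Hypothetical helper: succeed when two versions share MAJOR.MINOR (a leading "v" is optional).
same_minor_version() {
  local a b
  a=$(echo "${1#v}" | cut -d. -f1,2)
  b=$(echo "${2#v}" | cut -d. -f1,2)
  [ "$a" = "$b" ]
}
```

Usage on a node: `same_minor_version "$(crictl --version | awk '{print $NF}')" v1.28.0 && echo "crictl matches"`.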

Step 8 Install the Kubernetes v1.28.0 toolset: kubeadm, kubelet, kubectl

```shell
# Yum repository for the Kubernetes packages (Aliyun mirror)
cat > kubernetes.repo <<'EOF'
[kubernetes]
name=Kubernetes
baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/
enabled=1
gpgcheck=1
repo_gpgcheck=1
gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
EOF

for i in {192.168.136.153,192.168.136.145,192.168.136.152}; do scp ./kubernetes.repo root@$i:/etc/yum.repos.d/; done
for i in {192.168.136.153,192.168.136.145,192.168.136.152}; do ssh root@$i "yum install -y {kubeadm,kubelet,kubectl}-1.28.0-0"; done

# Use the systemd cgroup driver for kubelet (/etc/sysconfig/kubelet on every node)
cat > kubelet <<'EOF'
KUBELET_EXTRA_ARGS=--cgroup-driver=systemd
EOF
for i in {192.168.136.153,192.168.136.145,192.168.136.152}; do scp ./kubelet root@$i:/etc/sysconfig/; done

for i in {192.168.136.153,192.168.136.145,192.168.136.152}; do ssh root@$i "systemctl start kubelet && systemctl enable kubelet && systemctl is-active kubelet"; done
```

Until kubeadm init or kubeadm join runs on a node, kubelet restarts repeatedly, so `systemctl is-active` may report `activating`; this is expected at this stage.
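Before initializing the cluster, it can help to confirm that every node got the same package versions. A sketch, assuming `kubeadm version -o short` prints `v1.28.0` and `kubelet --version` prints `Kubernetes v1.28.0`; `check_k8s_versions`, `HOSTS` and `K8S_VERSION` are hypothetical names:

```shell
# Hypothetical check: compare kubeadm and kubelet versions on each node with K8S_VERSION.
check_k8s_versions() {
  local expected="${K8S_VERSION:-v1.28.0}" host got rc=0
  for host in ${HOSTS:-192.168.136.153 192.168.136.145 192.168.136.152}; do
    # Keep the last field of each output line and de-duplicate:
    # a single remaining line means kubeadm and kubelet agree.
    got=$(ssh -n "root@$host" 'kubeadm version -o short; kubelet --version' | awk '{print $NF}' | sort -u)
    if [ "$got" = "$expected" ]; then
      echo "OK   $host $got"
    else
      echo "FAIL $host $(echo $got)"   # unquoted echo puts multiple versions on one line
      rc=1
    fi
  done
  return $rc
}
```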

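Step 9 Initialize all master nodes (only master0 in this plan)

```shell
kubeadm config print init-defaults | tee kubernetes-init.yaml
kubeadm init --config ./kubernetes-init.yaml
export KUBECONFIG=/etc/kubernetes/admin.conf
```

Note: edit kubernetes-init.yaml as needed before running kubeadm init; the printed defaults contain placeholders such as `advertiseAddress: 1.2.3.4`.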
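A sketch of the typical edits, assuming the layout printed by `kubeadm config print init-defaults` (`advertiseAddress: 1.2.3.4`, `name: node`, `imageRepository: registry.k8s.io`, and a `networking` block with `serviceSubnet`). The Aliyun image repository and `podSubnet: 10.244.0.0/16` are assumptions: the former matches the mirrors used elsewhere here, the latter is flannel's default network in Step 11. `patch_kubeadm_init` and `IMAGE_REPO` are hypothetical names, and GNU sed is assumed.

```shell
# Hypothetical helper: $1 = kubernetes-init.yaml, $2 = API server IP, $3 = node name.
patch_kubeadm_init() {
  local file="$1" ip="${2:-192.168.136.153}" node="${3:-master0}"
  local repo="${IMAGE_REPO:-registry.aliyuncs.com/google_containers}"
  sed -i \
    -e "s/^\([[:space:]]*advertiseAddress:\).*/\1 ${ip}/" \
    -e "s/^\([[:space:]]*name:\) node\$/\1 ${node}/" \
    -e "s#^imageRepository: .*#imageRepository: ${repo}#" \
    "$file"
  # Add podSubnet once, indented like serviceSubnet (GNU sed supports \n in the replacement).
  if ! grep -q 'podSubnet:' "$file"; then
    sed -i 's#^\([[:space:]]*\)serviceSubnet: .*#&\n\1podSubnet: 10.244.0.0/16#' "$file"
  fi
}
```

Usage: `patch_kubeadm_init ./kubernetes-init.yaml`, review the file, then run `kubeadm init --config ./kubernetes-init.yaml`.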

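Step 10 Join all slave nodes to the initialized cluster

```shell
# The token and CA certificate hash below come from the author's own kubeadm init output;
# substitute the values printed by your kubeadm init.
for i in {192.168.136.145,192.168.136.152}; do
  ssh root@$i "kubeadm join 192.168.136.153:6443 --token abcdef.0123456789abcdef \
    --discovery-token-ca-cert-hash sha256:24421351ee9325ac028036b089aa62f01c7e39ec385135c22e113f657b0d78ba \
    --cri-socket=unix:///var/run/containerd/containerd.sock"
done
```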
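If the original kubeadm init output is lost, `kubeadm token create --print-join-command` on master0 prints a fresh join command; the default bootstrap token from init-defaults expires after 24 hours. The CA hash itself can be recomputed from the cluster CA with openssl, as in the kubeadm documentation. A sketch wrapping that pipeline in a hypothetical `ca_cert_hash` function (RSA CA key assumed, which is the kubeadm default):

```shell
# Hypothetical helper: print the SHA-256 of the CA public key (DER-encoded),
# i.e. the value that follows "sha256:" in --discovery-token-ca-cert-hash.
ca_cert_hash() {
  openssl x509 -pubkey -noout -in "${1:-/etc/kubernetes/pki/ca.crt}" \
    | openssl rsa -pubin -outform der 2>/dev/null \
    | openssl dgst -sha256 -hex \
    | sed 's/^.* //'
}
```

Usage on master0: `echo "sha256:$(ca_cert_hash)"`.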

Step 11 Install the CNI network plugin

```shell
wget https://github.com/flannel-io/flannel/releases/latest/download/kube-flannel.yml
kubectl apply -f /home/centos7-00/kube-flannel.yml
```

Note: flannel is used here; choose another CNI plugin if your network plan calls for it.
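flannel's pod network must match the cluster's pod CIDR. A sketch that extracts the `"Network"` value from the downloaded manifest (hypothetical `flannel_network`; it assumes the manifest embeds `net-conf.json` with a line such as `"Network": "10.244.0.0/16",`), so it can be compared with the output of `kubectl -n kube-system get configmap kubeadm-config -o yaml | grep podSubnet`:

```shell
# Hypothetical helper: print the first "Network" value found in kube-flannel.yml.
flannel_network() {
  sed -n 's/.*"Network": *"\([^"]*\)".*/\1/p' "${1:-kube-flannel.yml}" | head -n 1
}
```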

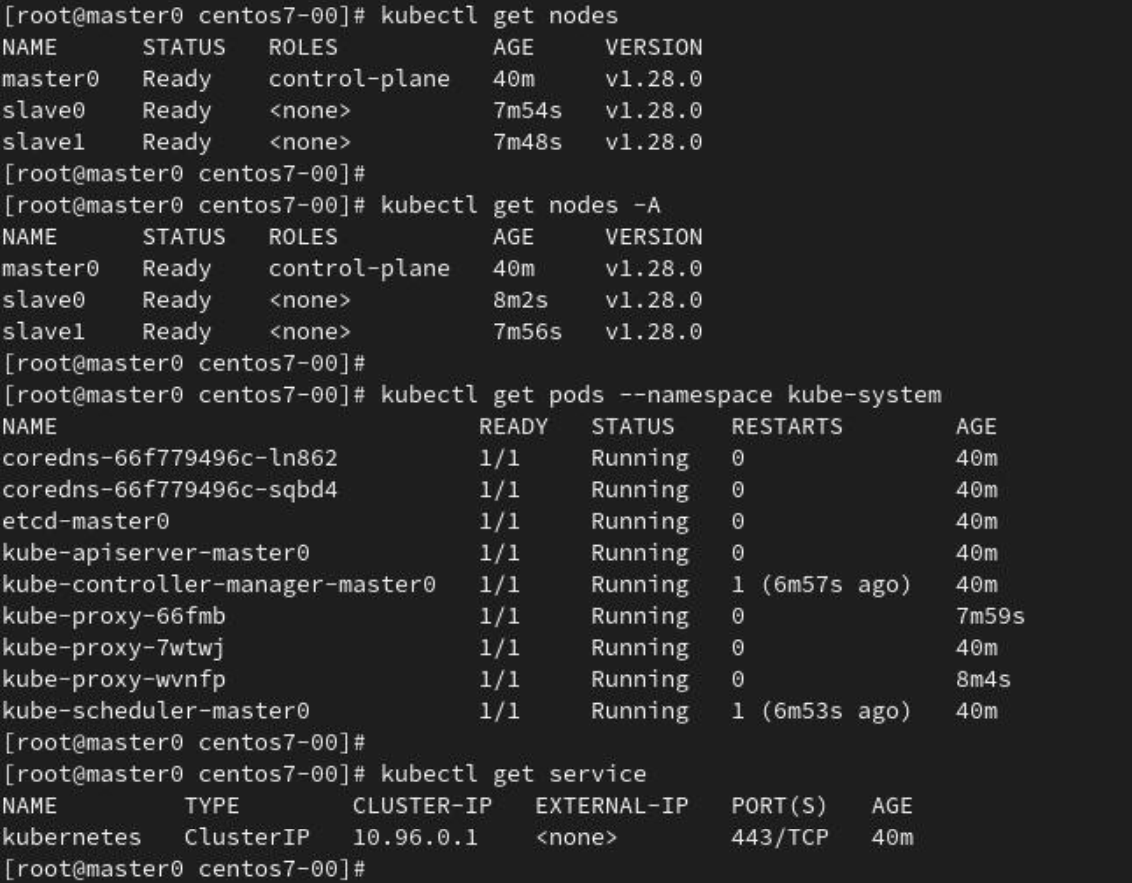

Step 12 Check the cluster status
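The usual commands here are `kubectl get nodes -o wide` and `kubectl get pods -A`. A sketch of a scripted readiness check, with `all_nodes_ready` as a hypothetical helper that reads `kubectl get nodes --no-headers` output on stdin (column 2 is STATUS):

```shell
# Hypothetical helper: list nodes whose STATUS is not exactly "Ready"
# (a cordoned node shows "Ready,SchedulingDisabled" and is reported too);
# exit non-zero if any such node exists.
all_nodes_ready() {
  awk '$2 != "Ready" { bad = 1; print "NotReady: " $1 } END { exit bad }'
}
```

Usage on master0: `kubectl get nodes --no-headers | all_nodes_ready && kubectl get pods -A`.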

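Step 13 Gracefully shut down the Kubernetes cluster

```shell
# Shut down the slaves first and master0 last; "shutdown -h 1" halts each host after one minute.
for i in {192.168.136.152,192.168.136.145,192.168.136.153}; do ssh root@$i "shutdown -h 1"; done
```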
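Step 13 powers the hosts off directly. A gentler variant, sketched below with hypothetical `run` and `drain_and_shutdown` helpers, first drains each slave from master0 so its pods are evicted cleanly (`--ignore-daemonsets` and `--delete-emptydir-data` are standard `kubectl drain` flags), then schedules its shutdown; master0 is shut down last as before. After powering back on, `kubectl uncordon <node>` makes each node schedulable again.

```shell
# Hypothetical helpers. DRY_RUN=1 prints the commands instead of executing them.
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then echo "$*"; else "$@"; fi
}

# Drain and shut down the given worker nodes (names as in /etc/hosts), in order.
drain_and_shutdown() {
  local node
  for node in "$@"; do
    run kubectl drain "$node" --ignore-daemonsets --delete-emptydir-data
    run ssh -n "root@$node" "shutdown -h 1"
  done
}
```

Usage on master0: `drain_and_shutdown slave1 slave0`, then `shutdown -h 1` on master0 itself.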
