Installing and Configuring Hadoop on VMware ESXi Virtual Machines

1.2 Virtual machine plan

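The cluster consists of 3 nodes: 1 master and 2 slaves. The nodes are connected over a LAN and can ping one another. Their IP addresses are:

| Host name | IP address |
|---|---|
| Master | 10.100.100.178 |
| Datanode1 | 10.100.100.179 |
| Datanode2 | 10.100.100.180 |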

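All three nodes run Red Hat Linux x86-64 and have the same user, grid. The Master machine takes the NameNode and JobTracker roles: it manages the distributed file system and splits jobs into tasks. The two slave machines take the DataNode and TaskTracker roles: they store the distributed data and execute the tasks.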

Each VM is configured as follows:

Memory: 1 GB; disk: 20 GB; OS: Red Hat Linux 5.4 x86-64

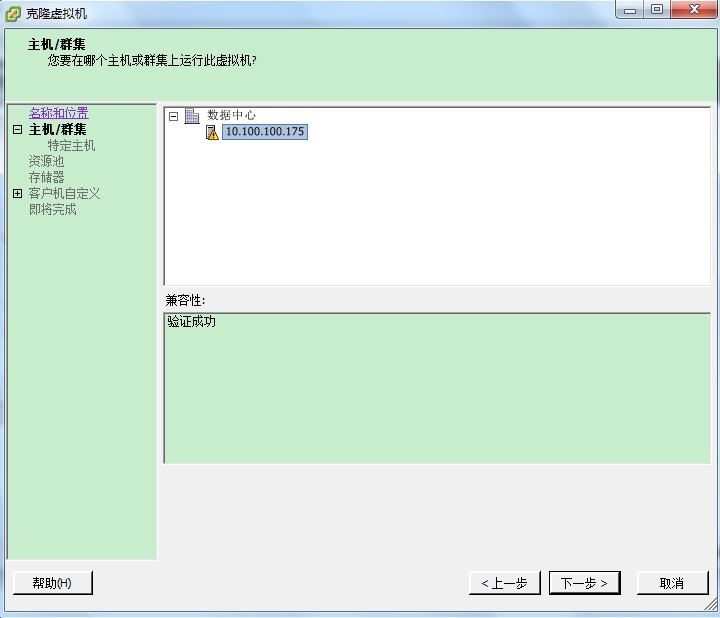

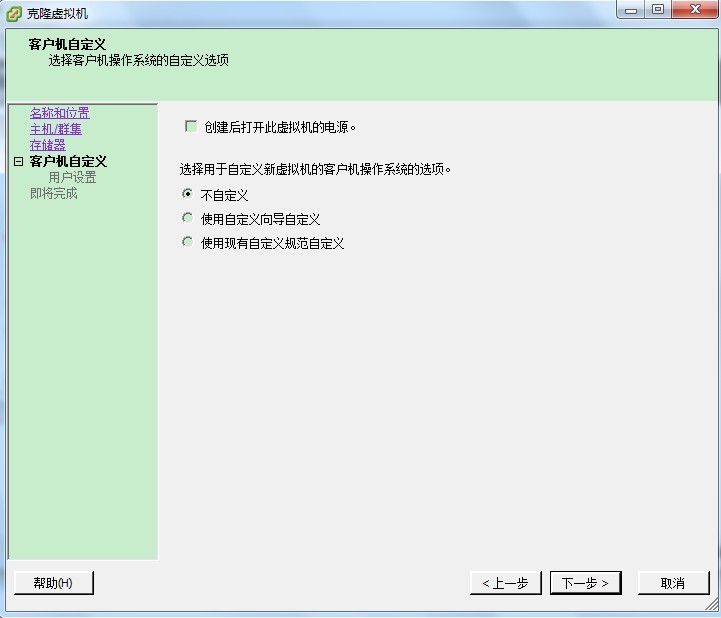

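The VM installation itself is omitted. Install one VM first, set up the common software such as Java on it, and then copy it to create the other nodes.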

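2 Installing the Java JDK

First download JDK 1.6 from the official website and upload it to the /soft directory on the VM. The installation and configuration steps follow.

2.1 Installation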

[root@localhost init.d]# cd /soft

[root@localhost soft]# ls

jdk-6u35-linux-x64-rpm.bin

[root@localhost soft]# ls -l

total 67296

-rw-r----- 1 root root 68834864 Oct 9 17:40 jdk-6u35-linux-x64-rpm.bin

[root@localhost soft]# chmod +x jdk-6u35-linux-x64-rpm.bin

[root@localhost soft]# ./jdk-6u35-linux-x64-rpm.bin

Unpacking...

Checksumming...

Extracting...

UnZipSFX 5.50 of 17 February 2002, by Info-ZIP (Zip-Bugs@lists.wku.edu).

inflating: jdk-6u35-linux-amd64.rpm

inflating: sun-javadb-common-10.6.2-1.1.i386.rpm

inflating: sun-javadb-core-10.6.2-1.1.i386.rpm

inflating: sun-javadb-client-10.6.2-1.1.i386.rpm

inflating: sun-javadb-demo-10.6.2-1.1.i386.rpm

inflating: sun-javadb-docs-10.6.2-1.1.i386.rpm

inflating: sun-javadb-javadoc-10.6.2-1.1.i386.rpm

Preparing... ########################################### [100%]

1:jdk ########################################### [100%]

Unpacking JAR files...

rt.jar...

jsse.jar...

charsets.jar...

tools.jar...

localedata.jar...

plugin.jar...

javaws.jar...

deploy.jar...

Installing JavaDB

Preparing... ########################################### [100%]

1:sun-javadb-common ########################################### [ 17%]

2:sun-javadb-core ########################################### [ 33%]

3:sun-javadb-client ########################################### [ 50%]

4:sun-javadb-demo ########################################### [ 67%]

5:sun-javadb-docs ########################################### [ 83%]

6:sun-javadb-javadoc ########################################### [100%]

Java(TM) SE Development Kit 6 successfully installed.

Product Registration is FREE and includes many benefits:

* Notification of new versions, patches, and updates

* Special offers on Oracle products, services and training

* Access to early releases and documentation

Product and system data will be collected. If your configuration

supports a browser, the JDK Product Registration form will

be presented. If you do not register, none of this information

will be saved. You may also register your JDK later by

opening the register.html file (located in the JDK installation

directory) in a browser.

For more information on what data Registration collects and

how it is managed and used, see:

http://java.sun.com/javase/registration/JDKRegistrationPrivacy.html

Press Enter to continue.....

Done.

2.2 Configuring environment variables

[root@localhost soft]# cd /usr

[root@localhost usr]# cd java

[root@localhost java]# ls

default jdk1.6.0_35 latest

[root@localhost java]# cd jdk1.6.0_35/

[root@localhost jdk1.6.0_35]# ls

bin COPYRIGHT include jre lib LICENSE man README.html register.html register_ja.html register_zh_CN.html src.zip THIRDPARTYLICENSEREADME.txt

[root@localhost jdk1.6.0_35]# vi /etc/profile

Add the following environment variables to /etc/profile:

export JAVA_HOME=/usr/java/jdk1.6.0_35

export JAVA_BIN=/usr/java/jdk1.6.0_35/bin

export PATH=$JAVA_HOME/bin:$PATH

export CLASSPATH=.:$JAVA_HOME/lib/dt.jar:$JAVA_HOME/lib/tools.jar

export JAVA_HOME JAVA_BIN PATH CLASSPATH

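Note: put the JDK path at the front of PATH. I had selected the Java environment when installing the OS, and when I first put the JDK path at the end of PATH, java -version kept reporting version 1.4.2. Use which java to check which java binary is actually in use.

Once the variables have taken effect (log in again, or run source /etc/profile), confirm the JDK version: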

[root@localhost ~]# which java

/usr/java/jdk1.6.0_35/bin/java

[root@localhost ~]# java -version

java version "1.6.0_35"

Java(TM) SE Runtime Environment (build 1.6.0_35-b10)

Java HotSpot(TM) 64-Bit Server VM (build 20.10-b01, mixed mode)
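To script the which java check from the note above, here is a minimal bash sketch that walks PATH from left to right and reports whether the first java it finds is `$JAVA_HOME/bin/java`. The function names `first_java_on_path` and `check_java_home` are hypothetical helpers, not part of the JDK or of Red Hat.

```shell
#!/usr/bin/env bash
# Hypothetical helpers: verify that PATH resolves "java" to $JAVA_HOME/bin/java.

# Print the first executable "java" found while scanning PATH left to right.
first_java_on_path() {
  local dir
  local IFS=':'
  for dir in $PATH; do
    if [ -x "$dir/java" ]; then
      printf '%s\n' "$dir/java"
      return 0
    fi
  done
  return 1
}

# Succeed only if the java picked from PATH is the one under $JAVA_HOME/bin.
check_java_home() {
  local found
  if ! found=$(first_java_on_path); then
    echo "no java found on PATH" >&2
    return 1
  fi
  if [ "$found" = "$JAVA_HOME/bin/java" ]; then
    echo "OK: $found"
  else
    echo "WARNING: PATH resolves java to $found, not $JAVA_HOME/bin/java" >&2
    return 1
  fi
}
```

For example, after source /etc/profile, run `check_java_home`; a WARNING line means an older java (such as the 1.4.2 one mentioned above) still comes first on PATH.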

Create the user that runs Hadoop and set its password.

Add the Hadoop user grid:

[root@Master ~]# adduser grid

Set the password:

[root@Master ~]# passwd grid

Changing password for user grid.

New UNIX password:

BAD PASSWORD: it is too short

Retype new UNIX password:

passwd: all authentication tokens updated successfully.

[root@Master ~]#

Add grid to the root group:

[root@Master ~]# gpasswd -a grid root

4.2 Configuring the VM nodes

4.2.1 Setting the hostname

[root@Master ~]# vi /etc/sysconfig/network

NETWORKING=yes

NETWORKING_IPV6=yes

HOSTNAME=Master

GATEWAY=10.100.100.1

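[root@Master ~]# hostname Master

HOSTNAME is the host name. On the other two nodes, set it to Datanode1 and Datanode2 respectively, and run hostname followed by the new name so that it takes effect immediately; the HOSTNAME line in /etc/sysconfig/network is what applies after a reboot.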
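The same change can be scripted on each copied VM. This is a minimal sketch, assuming GNU sed (for `sed -i`, as shipped with Red Hat); `set_hostname_line` is a hypothetical helper that rewrites the HOSTNAME= line in whatever network file it is given, or appends one if it is missing.

```shell
#!/usr/bin/env bash
# Hypothetical helper: set HOSTNAME=<name> in a RHEL-style network file.
# Example (as root on Datanode1):
#   set_hostname_line /etc/sysconfig/network Datanode1 && hostname Datanode1
set_hostname_line() {
  local file="$1" name="$2"
  if grep -q '^HOSTNAME=' "$file"; then
    # Replace the existing line in place (GNU sed -i).
    sed -i "s/^HOSTNAME=.*/HOSTNAME=${name}/" "$file"
  else
    # No HOSTNAME= line yet: append one.
    printf 'HOSTNAME=%s\n' "$name" >> "$file"
  fi
}
```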

4.2.2 Setting the IP address

[root@Master ~]# vi /etc/sysconfig/network-scripts/ifcfg-eth0

# Intel Corporation 82545EM Gigabit Ethernet Controller (Copper)

DEVICE=eth0

BOOTPROTO=none

BROADCAST=10.100.100.255

HWADDR=00:0C:29:E1:D9:FF

IPADDR=10.100.100.178

IPV6INIT=yes

IPV6_AUTOCONF=yes

NETMASK=255.255.255.0

NETWORK=10.100.100.0

ONBOOT=yes

GATEWAY=10.100.100.1

TYPE=Ethernet

PEERDNS=yes

USERCTL=no

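IPADDR=10.100.100.178 is the node's IP address; set it to 10.100.100.179 and 10.100.100.180 on the other two nodes. Because those nodes are copies of this VM, also make sure HWADDR matches each copy's own MAC address.

Restart the network service so the IP change takes effect: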

[root@Master ~]# /etc/init.d/network restart
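The NETWORK and BROADCAST values in ifcfg-eth0 follow from IPADDR and NETMASK: network = IP AND mask, and broadcast = network OR (NOT mask). The bash sketch below computes both, which is a quick way to double-check the file after editing IPADDR on each node. The helper names are hypothetical, and the sketch assumes plain dotted-decimal input without leading zeros.

```shell
#!/usr/bin/env bash
# Hypothetical helpers: derive NETWORK and BROADCAST from an IPv4 address and netmask.

# ip_to_int A.B.C.D -> 32-bit integer (no leading zeros, or bash reads octal).
ip_to_int() {
  local IFS=.
  local a b c d
  read -r a b c d <<< "$1"
  echo "$(( (a << 24) | (b << 16) | (c << 8) | d ))"
}

# int_to_ip N -> dotted-decimal string.
int_to_ip() {
  local n="$1"
  echo "$(( (n >> 24) & 255 )).$(( (n >> 16) & 255 )).$(( (n >> 8) & 255 )).$(( n & 255 ))"
}

# network_and_broadcast IP NETMASK -> prints "NETWORK BROADCAST".
network_and_broadcast() {
  local ip mask net bcast
  ip=$(ip_to_int "$1")
  mask=$(ip_to_int "$2")
  net=$(( ip & mask ))
  bcast=$(( net | (~mask & 0xFFFFFFFF) ))
  echo "$(int_to_ip "$net") $(int_to_ip "$bcast")"
}
```

For example, `network_and_broadcast 10.100.100.179 255.255.255.0` prints `10.100.100.0 10.100.100.255`, the same NETWORK and BROADCAST values as in the file above.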

4.2.3 Configuring /etc/hosts

[root@Master ~]# vi /etc/hosts

# Do not remove the following line, or various programs

# that require network functionality will fail.

127.0.0.1 localhost

::1 localhost6.localdomain6 localhost6

10.100.100.178 Master

10.100.100.179 Datanode1

10.100.100.180 Datanode2

After this, ping the other two nodes by host name from each node; every ping must succeed.
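Since the same three lines go into /etc/hosts on every node, they can be added by a script that is safe to run more than once. This is a sketch under the node plan from section 1.2; `add_host_entry` and `add_cluster_hosts` are hypothetical helpers, and a name counts as "already present" if it appears on any non-comment line.

```shell
#!/usr/bin/env bash
# Hypothetical helpers: make sure a hosts file maps every cluster node,
# without adding duplicate lines when run more than once.

# add_host_entry FILE IP NAME: append "IP NAME" unless NAME is already listed in FILE.
add_host_entry() {
  local file="$1" ip="$2" name="$3"
  if awk -v n="$name" '$1 !~ /^#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
                       END { exit !found }' "$file"; then
    return 0
  fi
  printf '%s %s\n' "$ip" "$name" >> "$file"
}

# add_cluster_hosts FILE: apply the three-node plan.
add_cluster_hosts() {
  local file="$1" ip name
  while read -r ip name; do
    add_host_entry "$file" "$ip" "$name"
  done <<'EOF'
10.100.100.178 Master
10.100.100.179 Datanode1
10.100.100.180 Datanode2
EOF
}
```

As root on each node, run `add_cluster_hosts /etc/hosts`, then check reachability with something like `for h in Master Datanode1 Datanode2; do ping -c 1 "$h" >/dev/null && echo "$h ok" || echo "$h unreachable"; done`.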

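4.2.4 Setting up SSH trust (passwordless login)

This assumes all three VMs are running and can ping one another.

Log in to each machine as grid and generate a key pair (run this on every node):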

[grid@Master ~]$ ssh-keygen -t rsa

Generating public/private rsa key pair.

Enter file in which to save the key (/home/grid/.ssh/id_rsa):

Created directory '/home/grid/.ssh'.

Enter passphrase (empty for no passphrase):

Enter same passphrase again:

Your identification has been saved in /home/grid/.ssh/id_rsa.

Your public key has been saved in /home/grid/.ssh/id_rsa.pub.

The key fingerprint is:

ed:58:a8:00:ea:c1:9a:71:a5:4b:ea:f0:67:9d:39:17 grid@Master

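Next, collect every node's public key into a single authorized_keys file and put that file on all nodes; after that, the nodes can ssh to one another without a password. On Master, start authorized_keys from the local public key and print the public keys of the other two nodes: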

[grid@Master ~]$ cd .ssh

[grid@Master .ssh]$ ls -l

total 8

-rw------- 1 grid grid 1671 Oct 9 22:43 id_rsa

-rw-r--r-- 1 grid grid 393 Oct 9 22:43 id_rsa.pub

[grid@Master .ssh]$ cp id_rsa.pub authorized_keys

[grid@Master .ssh]$ ssh Datanode1 cat ~/.ssh/id_rsa.pub && ssh Datanode2 cat ~/.ssh/id_rsa.pub

The authenticity of host 'datanode1 (10.100.100.179)' can't be established.

RSA key fingerprint is c1:b8:84:4d:06:74:50:d9:97:c3:ff:10:ca:26:94:e0.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added 'datanode1,10.100.100.179' (RSA) to the list of known hosts.

grid@datanode1's password:

ssh-rsa AAAAB3NzaC1yc2EAAAABIwAAAQEAtZ9eSe3ZjWIcAesLyrXwjhwTnfTC6Fh+49kvCK4UbA6zy4ra4dT4hsu2KfqErIBgBDaEvPxrKnuGkFJpS7X48ums3U2cM54RaQ/ZGjHF+iNDuiu6t5Dn6Etfi03qiqwSFQKm/d2aJu1glK+aNGgYAAaRNrH9usx91PXnn3naqdlKvW9CKNzxlTF84C7pdqI+NOBPxJEtX0XWNdnF22T6RBEwEagv/oHqP3OsozGJXGpQMHT99qPs+R+Zj58VeAVzmuEW9LF/uGl0Vjdoc79uSThgSo3JYbiGJ3fsz/7i2LI4lWrq2azeFKEnBm5n6EvWCMgQNJZ17ANt4qtCWQkgKw== grid@Datanode1

The authenticity of host 'datanode2 (10.100.100.180)' can't be established.

RSA key fingerprint is c1:b8:84:4d:06:74:50:d9:97:c3:ff:10:ca:26:94:e0.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added 'datanode2,10.100.100.180' (RSA) to the list of known hosts.

grid@datanode2's password:

ssh-rsa AAAAB3NzaC1yc2EAAAABIwAAAQEA0J2iEsi94oTZVMM7GMBUmv9Obfz65khrk7rRAfObnsGNEYiqwv5JCFZhkN3I4uL7be65vMd8XXpLCVOrwwY4LYaA8NcGLeEWq0bXXFE6V0xeHh/iBWECsopXkmKUEgX7euccXYH/GhFgQCvJ8WJREPUj3aRwfamPL8+V5Tj1USULY7k1/0lFUQzCs2DxjAfDdf+GN/ikXqjbUC5wkwzmJxxVfUGe1R/H9YKGRbgt7XoZ3AvJ7zJBshtm48sIS2MUWt0C0qJkQSJEu6NgQyFb0HGWUp9AkM8p0aGm4vftoA01xCcSUfJM06j2JHL+kxnEMy3V3g3VzxpVa/ER1eSsow== grid@Datanode2

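The command above only prints the remote keys. Append both of them to authorized_keys (for example with ssh Datanode1 cat ~/.ssh/id_rsa.pub >> authorized_keys) so that it holds the keys of all three nodes: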

[grid@Master .ssh]$ cat authorized_keys

ssh-rsa AAAAB3NzaC1yc2EAAAABIwAAAQEA2qJKG/szmpICXbOmw6WJWYgl7kNMtgdAn5dUh1G8MFebdCDZtCk/a/0euEEuRzDarsaHP6DDGrWBsel6cCyyRZe7N1aAL8JGz7pwhDeQJu7vMlS8N9DLKJMiT6z9z8Y6FiSR4ZAVvQVpFXManV7Ktg7nCxf4dCjpWhEYMW9CUO9Au5eh9HpAwvlXxk0ATI2Thce4oC0K4quXk3HFiT8KVmYr99jgiZSuayEM/Rf9m5YYBN91r6lfCnid6iQTitJnmn+KOA/Hayro/LdgcuQKS9h4a4+sM3SD/79i3PDdKTdaabPuiV99XeM0QNF4U0pPS/7+IvRsUnigTfoMU+gg/Q== grid@Master

ssh-rsa AAAAB3NzaC1yc2EAAAABIwAAAQEAtZ9eSe3ZjWIcAesLyrXwjhwTnfTC6Fh+49kvCK4UbA6zy4ra4dT4hsu2KfqErIBgBDaEvPxrKnuGkFJpS7X48ums3U2cM54RaQ/ZGjHF+iNDuiu6t5Dn6Etfi03qiqwSFQKm/d2aJu1glK+aNGgYAAaRNrH9usx91PXnn3naqdlKvW9CKNzxlTF84C7pdqI+NOBPxJEtX0XWNdnF22T6RBEwEagv/oHqP3OsozGJXGpQMHT99qPs+R+Zj58VeAVzmuEW9LF/uGl0Vjdoc79uSThgSo3JYbiGJ3fsz/7i2LI4lWrq2azeFKEnBm5n6EvWCMgQNJZ17ANt4qtCWQkgKw== grid@Datanode1

ssh-rsa AAAAB3NzaC1yc2EAAAABIwAAAQEA0J2iEsi94oTZVMM7GMBUmv9Obfz65khrk7rRAfObnsGNEYiqwv5JCFZhkN3I4uL7be65vMd8XXpLCVOrwwY4LYaA8NcGLeEWq0bXXFE6V0xeHh/iBWECsopXkmKUEgX7euccXYH/GhFgQCvJ8WJREPUj3aRwfamPL8+V5Tj1USULY7k1/0lFUQzCs2DxjAfDdf+GN/ikXqjbUC5wkwzmJxxVfUGe1R/H9YKGRbgt7XoZ3AvJ7zJBshtm48sIS2MUWt0C0qJkQSJEu6NgQyFb0HGWUp9AkM8p0aGm4vftoA01xCcSUfJM06j2JHL+kxnEMy3V3g3VzxpVa/ER1eSsow== grid@Datanode2

Copy the file to the other two nodes and check that SSH trust works:

[grid@Master .ssh]$ scp authorized_keys grid@Datanode1:~/.ssh/

grid@datanode1's password:

authorized_keys 100% 1185 1.2KB/s 00:00

[grid@Master .ssh]$ scp authorized_keys grid@Datanode2:~/.ssh/

grid@datanode2's password:

authorized_keys 100% 1185 1.2KB/s 00:00

[grid@Master .ssh]$ ssh Datanode1

[grid@Datanode1 ~]$ ssh Datanode2

The authenticity of host 'datanode2 (10.100.100.180)' can't be established.

RSA key fingerprint is c1:b8:84:4d:06:74:50:d9:97:c3:ff:10:ca:26:94:e0.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added 'datanode2,10.100.100.180' (RSA) to the list of known hosts.

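Confirm that every node can ssh to each of the other two without a password prompt (a one-time host key confirmation, as above, is expected). This completes the SSH trust setup.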
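The key merge can also be done with a small script instead of pasting keys by hand. This is a minimal sketch; `merge_keys` is a hypothetical helper that concatenates public-key files, drops blank and duplicate lines, and applies directory 700 / file 600 permissions, which sshd's StrictModes check accepts.

```shell
#!/usr/bin/env bash
# Hypothetical helper: build DEST_DIR/authorized_keys from several public-key
# files, dropping blank or duplicate lines, with permissions sshd accepts.
merge_keys() {
  local dest="$1"
  shift
  mkdir -p "$dest"
  chmod 700 "$dest"
  # Concatenate all inputs; awk keeps the first copy of each non-empty line.
  cat "$@" | awk 'NF && !seen[$0]++' > "$dest/authorized_keys.tmp"
  mv "$dest/authorized_keys.tmp" "$dest/authorized_keys"
  chmod 600 "$dest/authorized_keys"
}
```

On Master, for example: `ssh Datanode1 cat .ssh/id_rsa.pub > /tmp/dn1.pub`, the same for Datanode2, then `merge_keys ~/.ssh ~/.ssh/id_rsa.pub /tmp/dn1.pub /tmp/dn2.pub`, and copy the result with `scp -p ~/.ssh/authorized_keys Datanode1:.ssh/` (and likewise for Datanode2). The /tmp file names here are only illustrative.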

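5 Configuring the Hadoop cluster

5.1 Downloading Hadoop

Download URL:

http://mirror.bit.edu.cn/apache/hadoop/common/hadoop-0.20.2/hadoop-0.20.2.tar.gz

I downloaded it directly on the server with wget; you can also download it elsewhere and upload it to the node.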

[root@Master ~]# wget http://mirror.bit.edu.cn/apache/hadoop/common/hadoop-0.20.2/hadoop-0.20.2.tar.gz

--2012-10-09 23:18:20-- http://mirror.bit.edu.cn/apache/hadoop/common/hadoop-0.20.2/hadoop-0.20.2.tar.gz

Resolving mirror.bit.edu.cn... 219.143.204.117

Connecting to mirror.bit.edu.cn|219.143.204.117|:80... connected.

HTTP request sent, awaiting response... 200 OK

Length: 44575568 (43M) [application/octet-stream]

Saving to: `hadoop-0.20.2.tar.gz'

100%[===================================================================================================================>] 44,575,568 102K/s in 7m 58s

2012-10-09 23:26:18 (91.1 KB/s) - `hadoop-0.20.2.tar.gz' saved [44575568/44575568]

[root@Master ~]# ls

anaconda-ks.cfg Desktop hadoop-0.20.2.tar.gz install.log install.log.syslog

[root@Master ~]# mv hadoop-0.20.2.tar.gz /home/grid

[root@Master ~]# su - grid

[grid@Master ~]$ ls

hadoop-0.20.2.tar.gz

[grid@Master ~]$ tar zxvf hadoop-0.20.2.tar.gz

hadoop-0.20.2/

hadoop-0.20.2/bin/

hadoop-0.20.2/c++/

hadoop-0.20.2/c++/Linux-amd64-64/

[grid@Master ~]$ ls

hadoop-0.20.2 hadoop-0.20.2.tar.gz

[grid@Master ~]$ cd hadoop-0.20.2

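5.3 Configuring Hadoop

In each XML file below, the added configuration is the property element inside configuration; the other lines come with the file.

5.3.1 core-site.xml

[grid@Master ~]$ cd hadoop-0.20.2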

[grid@Master hadoop-0.20.2]$ cd conf

[grid@Master conf]$ ls

capacity-scheduler.xml core-site.xml hadoop-metrics.properties hdfs-site.xml mapred-site.xml slaves ssl-server.xml.example

configuration.xsl hadoop-env.sh hadoop-policy.xml log4j.properties masters ssl-client.xml.example

[grid@Master conf]$ vi core-site.xml

<?xml version="1.0"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<!-- Put site-specific property overrides in this file. -->

<configuration>

<property>

<name>fs.default.name</name>

<value>hdfs://10.100.100.178:9000</value>

</property>

</configuration>
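fs.default.name is the URI of the default file system, i.e. the NameNode that clients and DataNodes connect to. If you prefer not to edit with vi, the file can be written in one step. This is a sketch; `write_site_xml` is a hypothetical helper that writes a single-property file in the same layout as above and does not escape XML special characters in its arguments.

```shell
#!/usr/bin/env bash
# Hypothetical helper: write a *-site.xml containing exactly one property,
# in the same layout as the file edited with vi above.
# Values are inserted verbatim (no XML escaping).
write_site_xml() {
  local file="$1" name="$2" value="$3"
  cat > "$file" <<EOF
<?xml version="1.0"?>
<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>
<!-- Put site-specific property overrides in this file. -->
<configuration>
  <property>
    <name>${name}</name>
    <value>${value}</value>
  </property>
</configuration>
EOF
}
```

For example: `write_site_xml ~/hadoop-0.20.2/conf/core-site.xml fs.default.name hdfs://10.100.100.178:9000`.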

5.3.2 mapred-site.xml

[grid@Master conf]$ vi mapred-site.xml

<?xml version="1.0"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<!-- Put site-specific property overrides in this file. -->

<configuration>

<property>

<name>mapred.job.tracker</name>

<value>10.100.100.178:9001</value>

</property>

</configuration>
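mapred.job.tracker is described in mapred-default.xml as the host and port the JobTracker runs at, so it is normally written as a bare host:port pair such as 10.100.100.178:9001, unlike the hdfs:// URI used for fs.default.name. A quick check can be sketched as follows, assuming the one-tag-per-line layout used in these files; `get_property` and `is_host_port` are hypothetical helpers.

```shell
#!/usr/bin/env bash
# Hypothetical helpers: read a property from a *-site.xml laid out one tag per
# line, and check that a value has the plain host:port form.

# get_property FILE NAME: print the <value> that follows <name>NAME</name>.
get_property() {
  awk -v n="<name>$2</name>" '
    index($0, n) { want = 1; next }
    want && /<value>/ {
      sub(/.*<value>/, "")
      sub(/<\/value>.*/, "")
      print
      exit
    }' "$1"
}

# is_host_port VALUE: accepts e.g. 10.100.100.178:9001 or Master:9001,
# rejects URLs such as http://host:port (the "/" characters fail the match).
is_host_port() {
  [[ "$1" =~ ^[A-Za-z0-9._-]+:[0-9]+$ ]]
}
```

For example: `is_host_port "$(get_property conf/mapred-site.xml mapred.job.tracker)" && echo ok`.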


5.3.4 hdfs-site.xml

[grid@Master conf]$ vi hdfs-site.xml

<?xml version="1.0"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<!-- Put site-specific property overrides in this file. -->

<configuration>

<property>

<name>dfs.replication</name>

<value>2</value>

</property>

</configuration>
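dfs.replication=2 matches the two DataNodes in this cluster. HDFS keeps each replica of a block on a different DataNode, so a factor larger than the number of DataNodes (the default is 3) leaves blocks under-replicated. A sanity check against conf/slaves might look like this; `check_replication` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Hypothetical helper: warn when a replication factor exceeds the number of
# DataNodes listed in a slaves file.
check_replication() {
  local replication="$1" slaves_file="$2" nodes
  # Count lines that are neither blank nor comments.
  nodes=$(grep -cEv '^[[:space:]]*(#|$)' "$slaves_file")
  if [ "$replication" -gt "$nodes" ]; then
    echo "dfs.replication=$replication but only $nodes DataNode(s) in $slaves_file" >&2
    return 1
  fi
  echo "OK: replication $replication <= $nodes DataNode(s)"
}
```

For example: `check_replication 2 ~/hadoop-0.20.2/conf/slaves`.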

5.3.5 hadoop-env.sh

[grid@Master conf]$ vi hadoop-env.sh

# Set Hadoop-specific environment variables here.

# The only required environment variable is JAVA_HOME. All others are

# optional. When running a distributed configuration it is best to

# set JAVA_HOME in this file, so that it is correctly defined on

# remote nodes.

# The java implementation to use. Required.

export JAVA_HOME=/usr/java/jdk1.6.0_35

Remove the # in front of the JAVA_HOME line and set it to the correct JDK path.
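The uncomment-and-edit step can be done with sed. This is a sketch assuming GNU sed (`-i` and `-E`); `set_hadoop_java_home` is a hypothetical helper that turns any commented-out or existing `export JAVA_HOME=` line into the given value, and appends one if none exists.

```shell
#!/usr/bin/env bash
# Hypothetical helper: uncomment (or add) the JAVA_HOME export in hadoop-env.sh.
set_hadoop_java_home() {
  local env_file="$1" java_home="$2"
  if grep -Eq '^[[:space:]]*#?[[:space:]]*export JAVA_HOME=' "$env_file"; then
    # Rewrite the (possibly commented-out) line; "|" is the delimiter because paths contain "/".
    sed -i -E "s|^[[:space:]]*#?[[:space:]]*export JAVA_HOME=.*|export JAVA_HOME=${java_home}|" "$env_file"
  else
    printf 'export JAVA_HOME=%s\n' "$java_home" >> "$env_file"
  fi
}
```

For example: `set_hadoop_java_home ~/hadoop-0.20.2/conf/hadoop-env.sh /usr/java/jdk1.6.0_35`.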

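5.3.6 masters and slaves

In Hadoop 0.20, masters lists the host that runs the SecondaryNameNode (the NameNode and JobTracker run on the machine where start-all.sh is launched), and slaves lists the hosts that run a DataNode and a TaskTracker.

[grid@Master conf]$ vi masters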

Master

[grid@Master conf]$ vi slaves

Datanode1

Datanode2
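Both files are plain lists with one host name per line, and each name must resolve through /etc/hosts. They can be written non-interactively with a sketch like the following; `write_node_lists` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Hypothetical helper: write conf/masters (one host) and conf/slaves (one or more hosts),
# one host name per line.
write_node_lists() {
  local conf_dir="$1" master="$2"
  shift 2
  printf '%s\n' "$master" > "$conf_dir/masters"
  printf '%s\n' "$@" > "$conf_dir/slaves"
}
```

For example: `write_node_lists ~/hadoop-0.20.2/conf Master Datanode1 Datanode2`.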

5.4 Copying the Hadoop directory to each node

[grid@Master ~]$ scp -r hadoop-0.20.2 Datanode1:~/

SerialUtils.hh 100% 4525 4.4KB/s 00:00

StringUtils.hh 100% 2441 2.4KB/s 00:00

[grid@Master ~]$ scp -r hadoop-0.20.2 Datanode2:~/

SerialUtils.hh 100% 4525 4.4KB/s 00:00

StringUtils.hh 100% 2441 2.4KB/s 00:00
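Rather than typing one scp per node, the copy can be driven by conf/slaves so the host list lives in one place. This is a sketch; `copy_to_slaves` is a hypothetical helper, and the `SCP` variable is an assumption added here so that `SCP=echo` prints the commands instead of running them.

```shell
#!/usr/bin/env bash
# Hypothetical helper: copy the Hadoop directory to the home directory of every
# host listed in <hadoop_dir>/conf/slaves. Set SCP=echo for a dry run.
copy_to_slaves() {
  local hadoop_dir="$1" host
  local scp_cmd="${SCP:-scp}"
  while read -r host; do
    case "$host" in
      ''|'#'*) continue ;;   # skip blank and comment lines
    esac
    # "host:" with an empty path means the remote home directory;
    # </dev/null stops scp/ssh from consuming the loop's stdin.
    "$scp_cmd" -r "$hadoop_dir" "${host}:" </dev/null || return 1
  done < "$hadoop_dir/conf/slaves"
}
```

For example, from grid's home on Master: `SCP=echo copy_to_slaves hadoop-0.20.2` to preview, then `copy_to_slaves hadoop-0.20.2`.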

[grid@Master ~]$ cd hadoop-0.20.2

[grid@Master hadoop-0.20.2]$ ls

bin CHANGES.txt docs hadoop-0.20.2-examples.jar ivy librecordio README.txt

build.xml conf hadoop-0.20.2-ant.jar hadoop-0.20.2-test.jar ivy.xml LICENSE.txt src

c++ contrib hadoop-0.20.2-core.jar hadoop-0.20.2-tools.jar lib NOTICE.txt webapps

[grid@Master hadoop-0.20.2]$ cd bin

[grid@Master bin]$ ls

hadoop hadoop-daemon.sh rcc start-all.sh start-dfs.sh stop-all.sh stop-dfs.sh

hadoop-config.sh hadoop-daemons.sh slaves.sh start-balancer.sh start-mapred.sh stop-balancer.sh stop-mapred.sh

[grid@Master bin]$ hadoop namenode -format

-bash: hadoop: command not found

[grid@Master bin]$ ./hadoop namenode -format

12/10/09 23:58:38 INFO namenode.NameNode: STARTUP_MSG:

/************************************************************

STARTUP_MSG: Starting NameNode

STARTUP_MSG: host = Master/10.100.100.178

STARTUP_MSG: args = [-format]

STARTUP_MSG: version = 0.20.2

STARTUP_MSG: build = https://svn.apache.org/repos/asf/hadoop/common/branches/branch-0.20 -r 911707; compiled by 'chrisdo' on Fri Feb 19 08:07:34 UTC 2010

************************************************************/

12/10/09 23:58:39 INFO namenode.FSNamesystem: fsOwner=grid,grid,root

12/10/09 23:58:39 INFO namenode.FSNamesystem: supergroup=supergroup

12/10/09 23:58:39 INFO namenode.FSNamesystem: isPermissionEnabled=true

12/10/09 23:58:39 INFO common.Storage: Image file of size 94 saved in 0 seconds.

12/10/09 23:58:39 INFO common.Storage: Storage directory /tmp/hadoop-grid/dfs/name has been successfully formatted.

12/10/09 23:58:39 INFO namenode.NameNode: SHUTDOWN_MSG:

/************************************************************

SHUTDOWN_MSG: Shutting down NameNode at Master/10.100.100.178

************************************************************/
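The first hadoop namenode -format attempt above fails with "command not found" because ~/hadoop-0.20.2/bin is not on PATH, which is why the command had to be run as ./hadoop. One option, sketched here under the assumption that Hadoop lives in /home/grid/hadoop-0.20.2, is to prepend that directory to PATH in grid's ~/.bash_profile; `path_prepend` is a hypothetical helper that skips directories already on PATH.

```shell
#!/usr/bin/env bash
# Hypothetical helper: prepend a directory to PATH unless it is already present.
path_prepend() {
  case ":$PATH:" in
    *":$1:"*) ;;                        # already on PATH, nothing to do
    *) PATH="$1${PATH:+:$PATH}" ;;      # put it first; avoid a trailing ":" if PATH was empty
  esac
  export PATH
}

# Example line for grid's ~/.bash_profile (install path assumed from section 5.1):
# path_prepend /home/grid/hadoop-0.20.2/bin
```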

5.6 Starting the daemons

[grid@Master bin]$ ls

hadoop hadoop-daemon.sh rcc start-all.sh start-dfs.sh stop-all.sh stop-dfs.sh

hadoop-config.sh hadoop-daemons.sh slaves.sh start-balancer.sh start-mapred.sh stop-balancer.sh stop-mapred.sh

[grid@Master bin]$ ./start-all.sh

starting namenode, logging to /home/grid/hadoop-0.20.2/bin/../logs/hadoop-grid-namenode-Master.out

Datanode1: starting datanode, logging to /home/grid/hadoop-0.20.2/bin/../logs/hadoop-grid-datanode-Datanode1.out

Datanode2: starting datanode, logging to /home/grid/hadoop-0.20.2/bin/../logs/hadoop-grid-datanode-Datanode2.out

The authenticity of host 'master (10.100.100.178)' can't be established.

RSA key fingerprint is c1:b8:84:4d:06:74:50:d9:97:c3:ff:10:ca:26:94:e0.

Are you sure you want to continue connecting (yes/no)? yes

Master: Warning: Permanently added 'master,10.100.100.178' (RSA) to the list of known hosts.

Master: starting secondarynamenode, logging to /home/grid/hadoop-0.20.2/bin/../logs/hadoop-grid-secondarynamenode-Master.out

starting jobtracker, logging to /home/grid/hadoop-0.20.2/bin/../logs/hadoop-grid-jobtracker-Master.out

Datanode1: starting tasktracker, logging to /home/grid/hadoop-0.20.2/bin/../logs/hadoop-grid-tasktracker-Datanode1.out

Datanode2: starting tasktracker, logging to /home/grid/hadoop-0.20.2/bin/../logs/hadoop-grid-tasktracker-Datanode2.out

5.7 Checking that the daemons are running

Master node:

[grid@Master conf]$ jps

19648 Jps

5736 NameNode

5952 JobTracker

5888 SecondaryNameNode

Slave nodes:

[grid@Datanode1 ~]$ jps

10732 Jps

5811 TaskTracker

5716 DataNode

[grid@Datanode2 ~]$ jps

5726 DataNode

5778 TaskTracker

10688 Jps

[grid@Datanode2 ~]$
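The jps check can be turned into a pass/fail test per node. This is a sketch that relies only on jps printing "pid ClassName" per line, as in the output above; `missing_daemons` is a hypothetical helper that returns non-zero and names any expected daemon that is absent.

```shell
#!/usr/bin/env bash
# Hypothetical helper: compare jps output against the daemons a node should run.
missing_daemons() {
  local jps_output="$1" daemon missing=0
  shift
  for daemon in "$@"; do
    # jps prints "<pid> <MainClassName>"; match the class name as the 2nd field.
    if ! printf '%s\n' "$jps_output" | awk -v d="$daemon" '$2 == d { found = 1 } END { exit !found }'; then
      echo "missing: $daemon"
      missing=1
    fi
  done
  return "$missing"
}
```

On Master: `missing_daemons "$(jps)" NameNode SecondaryNameNode JobTracker`; on each slave: `missing_daemons "$(jps)" DataNode TaskTracker`.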

This completes the installation of Hadoop in fully distributed mode.
