Understanding How Minikube Works Under the Hood

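With the explosive growth and adoption of container technology, Kubernetes is commonly described as a way to "manage a cluster of Linux containers as a single system to accelerate development and simplify maintenance." Meanwhile, any enterprise that containerizes its application services will inevitably have to learn and use Kubernetes. However, fully deploying a multi-node Kubernetes cluster is genuinely difficult for DevOps engineers who are new to this ecosystem.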

What Is Minikube?

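Kubernetes is an open-source orchestration system for containers (such as Docker containers), written in Go. It schedules workloads onto the nodes of a compute cluster and actively manages them so that their state matches the intent the user has declared. Minikube, in turn, is described as an open-source "local Kubernetes engine" that runs a Kubernetes cluster locally on macOS, Linux, and Windows. Like kubeadm, it is a companion tool officially recommended by the Kubernetes project; its goal is to be the tool for local Kubernetes application development and to support every Kubernetes feature that fits that use case.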

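Minikube primarily runs a single-node Kubernetes cluster inside a VM on the local machine to support development. It supports VM drivers such as VirtualBox, Hyper-V, and KVM2 (recent releases also offer container-based drivers such as Docker and Podman). As a relatively mature solution in the Kubernetes ecosystem, it supports an impressive list of features: load balancers, multiple clusters, NodePorts, persistent volumes, Ingress, the dashboard, and pluggable container runtimes.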

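With Minikube, developers, operators, and DevOps engineers can quickly set up a single-node Kubernetes cluster on their own machines. Because Minikube has modest hardware and software requirements, it is convenient for learning, experimenting, and day-to-day project development.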

What Can Minikube Do?

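As described above, Minikube is an open-source utility for running Kubernetes on a local machine. It creates a single-node cluster inside a virtual machine (VM). This cluster lets us exercise Kubernetes operations without spending the time and resources that a full-fledged K8s cluster requires. Minikube is therefore often described as a way to gain hands-on Kubernetes experience by managing a cluster locally, and it is also well suited to everyday project development.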

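Given this positioning, Minikube's main goal is to be the best tool for local Kubernetes application development and to support all Kubernetes features that fit. Next, let's look at the features Minikube supports.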

Minikube runs the latest stable release of Kubernetes and supports standard Kubernetes features, such as:

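1. LoadBalancer - using minikube tunnel

2. Multi-cluster - using minikube start -p

3. NodePorts - using minikube service

4. Persistent volumes

5. Ingress

6. Dashboard - minikube dashboard

7. Container runtimes - minikube start --container-runtime

8. Configuring apiserver and kubelet options via command-line flags

9. Support for common CI environments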

Beyond the above, Minikube also offers developer-friendly features, including:

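1. Addons - a marketplace where developers share configurations for running services on Minikube

2. NVIDIA GPU support - for machine learning

3. Filesystem mounts, and more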

For more on Minikube's features, see: https://minikube.sigs.k8s.io

Minikube Technology Stack

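Based on these features, the figure below briefly sketches the technology stack Minikube supports from several angles: operating system (OS), CPU architecture, hypervisor technology, container runtime (CRI), and Container Network Interface plugins (CNIs):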

Minikube Architecture

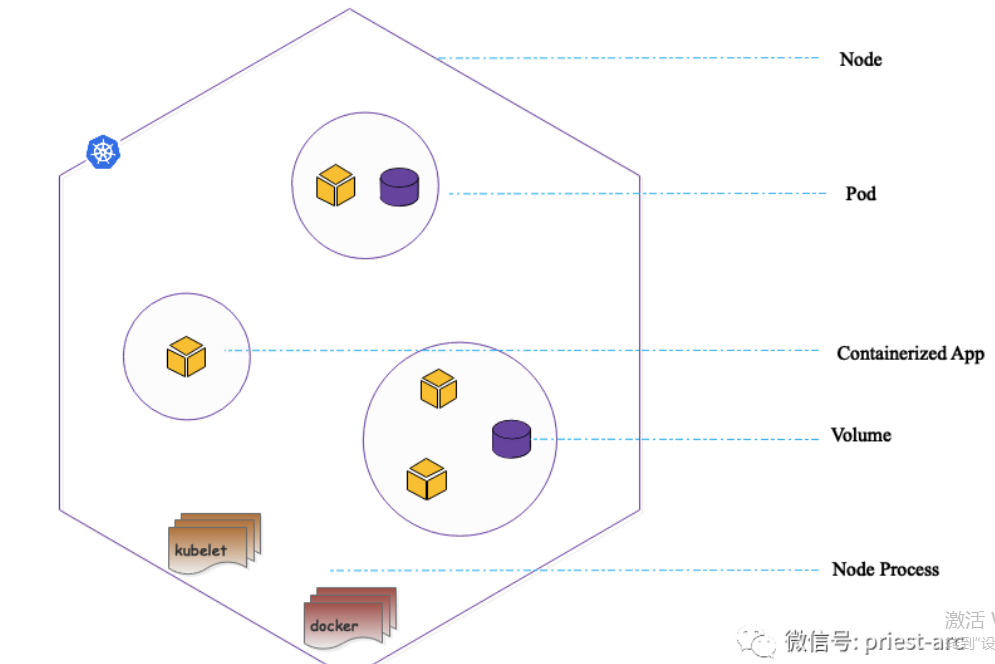

Minikube deploys a Kubernetes cluster in a local virtual machine environment. Its basic architecture is shown in the diagram below:

As the architecture diagram shows, starting Minikube's components mainly involves the following steps:

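1. Download the .iso file so that the local environment is ready for use

2. Extract the boot2docker.iso image from the downloaded .iso file

3. Create dynamic certificates for SSH

4. Create the VirtualBox VM files with the specified configuration

5. Set up storage to mount the boot2docker.iso file

6. Configure networking (IP, DHCP, etc.) and set up SSH inside the VM

7. Start the VM

8. Set /etc/hostname and /etc/hosts, and set up the systemd files the Docker engine needs to start

9. Prepare the base environment configuration for Kubernetes, Docker, and so on

10. Download all the relevant Kubernetes binaries - kubelet, kubeadm, etc.

11. Pull the Docker images for the various Kubernetes components and start the services, such as the distributed key-value store etcd, the scheduler, the controller manager, and the API server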

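As mentioned earlier, Minikube is written in Go and built on Docker's libmachine, using its driver model to create and manage locally running VMs and to interact with them. Back in the Kubernetes 1.2-1.3 era, Minikube's architecture consisted of the following two parts: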

1. Localkube

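To run and manage the Kubernetes components efficiently, Minikube used Localkube from Spread. Localkube is a single standalone Go binary that bundles all the main Kubernetes components and runs each of them in its own goroutine. Its source repository is https://github.com/redspread/localkube. According to its GitHub page, it has not been updated since April 2016, and the source of the latest Minikube release, 1.24.0, no longer contains anything related to Localkube.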

Minikube v0.4.0

Minikube v1.24.0
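Localkube's design, with all components in one binary and each running in its own goroutine, can be illustrated with a small sketch. The components here are hypothetical placeholders that just report readiness and wait for shutdown; this is not Localkube's code.

```go
package main

import (
	"context"
	"fmt"
	"sort"
	"sync"
)

// component stands in for a control-plane component (etcd, apiserver, ...)
// that Localkube ran as a goroutine inside a single binary.
type component func(ctx context.Context, ready chan<- string)

// fakeComponent reports readiness, then blocks until the process shuts down.
func fakeComponent(name string) component {
	return func(ctx context.Context, ready chan<- string) {
		ready <- name
		<-ctx.Done()
	}
}

// startAll launches every component in its own goroutine, waits until all of
// them have reported ready, and returns a stop function plus the sorted names.
func startAll(comps []component) (stop func(), started []string) {
	ctx, cancel := context.WithCancel(context.Background())
	ready := make(chan string, len(comps))
	var wg sync.WaitGroup
	for _, c := range comps {
		wg.Add(1)
		go func(c component) {
			defer wg.Done()
			c(ctx, ready)
		}(c)
	}
	for range comps {
		started = append(started, <-ready)
	}
	sort.Strings(started)
	// Cancelling the shared context stops every component; Wait blocks until all exit.
	return func() { cancel(); wg.Wait() }, started
}

func main() {
	stop, started := startAll([]component{
		fakeComponent("etcd"),
		fakeComponent("apiserver"),
		fakeComponent("controller-manager"),
		fakeComponent("scheduler"),
	})
	fmt.Println("running in one process:", started)
	stop()
}
```

The trade-off of this all-in-one approach is simplicity (one process to ship and supervise) at the cost of diverging from how Kubernetes components run in a real cluster, which is one reason later versions moved to kubeadm.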

2. libmachine

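To support macOS and Windows, Minikube uses libmachine internally to create and destroy VMs; it can be thought of as the VM driver layer. On Linux, the cluster can run directly on the host, so setting up a VM can be avoided.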
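The driver idea is essentially an interface plus a lookup by name. The sketch below assumes a much-reduced, hypothetical Driver interface loosely modeled on libmachine (the real drivers.Driver has many more methods) and a fake driver that records lifecycle calls instead of starting a VM.

```go
package main

import (
	"fmt"
	"sort"
)

// Driver is a hypothetical, much-reduced stand-in for libmachine's VM driver
// abstraction; the real drivers.Driver interface has many more methods.
type Driver interface {
	Name() string
	Create() error
	Start() error
	Remove() error
}

// fakeDriver records lifecycle calls instead of touching a hypervisor.
type fakeDriver struct {
	name  string
	calls []string
}

func (d *fakeDriver) Name() string  { return d.name }
func (d *fakeDriver) Create() error { d.calls = append(d.calls, "create"); return nil }
func (d *fakeDriver) Start() error  { d.calls = append(d.calls, "start"); return nil }
func (d *fakeDriver) Remove() error { d.calls = append(d.calls, "remove"); return nil }

// registry maps a driver name (as passed to --driver) to a constructor.
var registry = map[string]func() Driver{}

func register(name string) {
	registry[name] = func() Driver { return &fakeDriver{name: name} }
}

// newDriver looks up a driver by name and reports the known names on failure.
func newDriver(name string) (Driver, error) {
	ctor, ok := registry[name]
	if !ok {
		known := make([]string, 0, len(registry))
		for n := range registry {
			known = append(known, n)
		}
		sort.Strings(known)
		return nil, fmt.Errorf("unknown driver %q, known drivers: %v", name, known)
	}
	return ctor(), nil
}

// createHost runs the create-then-start lifecycle through the interface only,
// so the caller never depends on a specific hypervisor.
func createHost(d Driver) error {
	if err := d.Create(); err != nil {
		return fmt.Errorf("%s create: %w", d.Name(), err)
	}
	if err := d.Start(); err != nil {
		return fmt.Errorf("%s start: %w", d.Name(), err)
	}
	return nil
}

func main() {
	for _, n := range []string{"virtualbox", "hyperv", "kvm2"} {
		register(n)
	}
	d, err := newDriver("kvm2")
	if err != nil {
		fmt.Println(err)
		return
	}
	if err := createHost(d); err != nil {
		fmt.Println(err)
		return
	}
	fmt.Println("created host with", d.Name())
}
```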

Next, let's briefly analyze the core workflow:

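In the project layout, the cmd directory holds the entry points of Localkube (removed in current versions) and Minikube. The cmd/minikube/cmd/* directory contains the implementations of all Minikube subcommands, one file per subcommand.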
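The one-file-per-subcommand layout boils down to a table that maps a subcommand name to a handler with its own flags. minikube builds this with cobra; the sketch below uses only the standard library flag package to show the same dispatch idea, and its handlers and default values are hypothetical.

```go
package main

import (
	"flag"
	"fmt"
)

// subcommand is one entry of a hypothetical command table; minikube itself
// uses cobra, with one file per subcommand under cmd/minikube/cmd.
type subcommand func(args []string) (string, error)

var commands = map[string]subcommand{
	"start":  startCommand,
	"status": statusCommand,
}

// startCommand parses its own flags, like a per-file subcommand would.
// The defaults here are illustrative only.
func startCommand(args []string) (string, error) {
	fs := flag.NewFlagSet("start", flag.ContinueOnError)
	profile := fs.String("p", "minikube", "profile (cluster) name")
	runtime := fs.String("container-runtime", "docker", "container runtime")
	if err := fs.Parse(args); err != nil {
		return "", err
	}
	return fmt.Sprintf("starting profile %s with %s", *profile, *runtime), nil
}

func statusCommand(args []string) (string, error) {
	return "status is not implemented in this sketch", nil
}

// dispatch picks the subcommand from the first argument and hands it the rest.
func dispatch(argv []string) (string, error) {
	if len(argv) == 0 {
		return "", fmt.Errorf("usage: minikube <command> [flags]")
	}
	cmd, ok := commands[argv[0]]
	if !ok {
		return "", fmt.Errorf("unknown command %q", argv[0])
	}
	return cmd(argv[1:])
}

func main() {
	// A real CLI would pass os.Args[1:] here.
	out, err := dispatch([]string{"start", "-p", "dev", "--container-runtime=containerd"})
	if err != nil {
		fmt.Println("error:", err)
		return
	}
	fmt.Println(out)
}
```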

```go
/*
Copyright 2016 The Kubernetes Authors All rights reserved.

Licensed under the Apache License, Version 2.0 (the "License");
you may not use this file except in compliance with the License.
You may obtain a copy of the License at

    http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software
distributed under the License is distributed on an "AS IS" BASIS,
WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
See the License for the specific language governing permissions and
limitations under the License.
*/

package cmd

import (
	"context"
	"encoding/json"
	// ...
	"strconv"
	"strings"

	"github.com/Delta456/box-cli-maker/v2"
	"github.com/blang/semver/v4"
	"github.com/docker/machine/libmachine/ssh"
	"github.com/google/go-containerregistry/pkg/authn"
	"github.com/google/go-containerregistry/pkg/name"
	"github.com/google/go-containerregistry/pkg/v1/remote"
	"github.com/pkg/errors"
	"github.com/shirou/gopsutil/v3/cpu"
	gopshost "github.com/shirou/gopsutil/v3/host"
	"github.com/spf13/cobra"
	"github.com/spf13/viper"
	"k8s.io/klog/v2"
	cmdcfg "k8s.io/minikube/cmd/minikube/cmd/config"
	// ...
	"k8s.io/minikube/pkg/minikube/style"
	pkgtrace "k8s.io/minikube/pkg/trace"
	"k8s.io/minikube/pkg/minikube/registry"
	"k8s.io/minikube/pkg/minikube/translate"
	"k8s.io/minikube/pkg/util"
	"k8s.io/minikube/pkg/version"
)

var (
	registryMirror   []string
	insecureRegistry []string
	apiServerNames   []string
	apiServerIPs     []net.IP
	hostRe           = regexp.MustCompile(`^[^-][\w\.-]+$`)
)

func init() {
	initMinikubeFlags()
	initKubernetesFlags()
	initDriverFlags()
	initNetworkingFlags()
	if err := viper.BindPFlags(startCmd.Flags()); err != nil {
		exit.Error(reason.InternalBindFlags, "unable to bind flags", err)
	}
}

// startCmd represents the start command
var startCmd = &cobra.Command{
	Use:   "start",
	Short: "Starts a local Kubernetes cluster",
	Long:  "Starts a local Kubernetes cluster",
	Run:   runStart,
}

// platform generates a user-readable platform message
func platform() string {
	var s strings.Builder

	// Show the distro version if possible
	hi, err := gopshost.Info()
	if err == nil {
		s.WriteString(fmt.Sprintf("%s %s", strings.Title(hi.Platform), hi.PlatformVersion))
		klog.Infof("hostinfo: %+v", hi)
	} else {
		klog.Warningf("gopshost.Info returned error: %v", err)
		s.WriteString(runtime.GOOS)
	}

	vsys, vrole, err := gopshost.Virtualization()
	if err != nil {
		klog.Warningf("gopshost.Virtualization returned error: %v", err)
	} else {
		klog.Infof("virtualization: %s %s", vsys, vrole)
	}

	// This environment is exotic, let's output a bit more.
	if vrole == "guest" || runtime.GOARCH != "amd64" {
		if vrole == "guest" && vsys != "" {
			s.WriteString(fmt.Sprintf(" (%s/%s)", vsys, runtime.GOARCH))
		} else {
			s.WriteString(fmt.Sprintf(" (%s)", runtime.GOARCH))
		}
	}
	return s.String()
}

// runStart handles the executes the flow of "minikube start"
func runStart(cmd *cobra.Command, args []string) {
	register.SetEventLogPath(localpath.EventLog(ClusterFlagValue()))
	ctx := context.Background()
	out.SetJSON(outputFormat == "json")
	if err := pkgtrace.Initialize(viper.GetString(trace)); err != nil {
		exit.Message(reason.Usage, "error initializing tracing: {{.Error}}", out.V{"Error": err.Error()})
	}
	defer pkgtrace.Cleanup()
	displayVersion(version.GetVersion())
	go download.CleanUpOlderPreloads()

	// No need to do the update check if no one is going to see it
	if !viper.GetBool(interactive) || !viper.GetBool(dryRun) {
		// Avoid blocking execution on optional HTTP fetches
		go notify.MaybePrintUpdateTextFromGithub()
	}

	displayEnviron(os.Environ())
	if viper.GetBool(force) {
		out.WarningT("minikube skips various validations when --force is supplied; this may lead to unexpected behavior")
	}

	// if --registry-mirror specified when run minikube start,
	// take arg precedence over MINIKUBE_REGISTRY_MIRROR
	// actually this is a hack, because viper 1.0.0 can assign env to variable if StringSliceVar
	// and i can't update it to 1.4.0, it affects too much code
	// other types (like String, Bool) of flag works, so imageRepository, imageMirrorCountry
	// can be configured as MINIKUBE_IMAGE_REPOSITORY and IMAGE_MIRROR_COUNTRY
	// this should be updated to documentation
	if len(registryMirror) == 0 {
		registryMirror = viper.GetStringSlice("registry-mirror")
	}

	if !config.ProfileNameValid(ClusterFlagValue()) {
		out.WarningT("Profile name '{{.name}}' is not valid", out.V{"name": ClusterFlagValue()})
		exit.Message(reason.Usage, "Only alphanumeric and dashes '-' are permitted. Minimum 2 characters, starting with alphanumeric.")
	}

	existing, err := config.Load(ClusterFlagValue())
	if err != nil && !config.IsNotExist(err) {
		kind := reason.HostConfigLoad
		if config.IsPermissionDenied(err) {
			kind = reason.HostHomePermission
		}
		exit.Message(kind, "Unable to load config: {{.error}}", out.V{"error": err})
	}

	if existing != nil {
		upgradeExistingConfig(cmd, existing)
	} else {
		validateProfileName()
	}

	validateSpecifiedDriver(existing)
	validateKubernetesVersion(existing)

	ds, alts, specified := selectDriver(existing)
	if cmd.Flag(kicBaseImage).Changed {
		if !isBaseImageApplicable(ds.Name) {
			exit.Message(reason.Usage,
				"flag --{{.imgFlag}} is not available for driver '{{.driver}}'. Did you mean to use '{{.docker}}' or '{{.podman}}' driver instead?\n"+
					"Please use --{{.isoFlag}} flag to configure VM based drivers",
				out.V{
					"imgFlag": kicBaseImage,
					"driver":  ds.Name,
					"docker":  registry.Docker,
					"podman":  registry.Podman,
					"isoFlag": isoURL,
				},
			)
		}
	}

	starter, err := provisionWithDriver(cmd, ds, existing)
	if err != nil {
		node.ExitIfFatal(err)
		machine.MaybeDisplayAdvice(err, ds.Name)
		if specified {
			// If the user specified a driver, don't fallback to anything else
			exitGuestProvision(err)
		} else {
			success := false
			// Walk down the rest of the options
			for _, alt := range alts {
				// Skip non-default drivers
				if !alt.Default {
					continue
				}
				out.WarningT("Startup with {{.old_driver}} driver failed, trying with alternate driver {{.new_driver}}: {{.error}}", out.V{"old_driver": ds.Name, "new_driver": alt.Name, "error": err})
				ds = alt
				// Delete the existing cluster and try again with the next driver on the list
				profile, err := config.LoadProfile(ClusterFlagValue())
				if err != nil {
					klog.Warningf("%s profile does not exist, trying anyways.", ClusterFlagValue())
				}

				err = deleteProfile(ctx, profile)
				if err != nil {
					out.WarningT("Failed to delete cluster {{.name}}, proceeding with retry anyway.", out.V{"name": ClusterFlagValue()})
				}
				starter, err = provisionWithDriver(cmd, ds, existing)
				if err != nil {
					continue
				} else {
					// Success!
					success = true
					break
				}
			}
			if !success {
				exitGuestProvision(err)
			}
		}
	}

	if existing != nil && driver.IsKIC(existing.Driver) {
		if viper.GetBool(createMount) {
			old := ""
			if len(existing.ContainerVolumeMounts) > 0 {
				old = existing.ContainerVolumeMounts[0]
			}
			if mount := viper.GetString(mountString); old != mount {
				exit.Message(reason.GuestMountConflict, "Sorry, {{.driver}} does not allow mounts to be changed after container creation (previous mount: '{{.old}}', new mount: '{{.new}})'", out.V{
					"driver": existing.Driver,
					"new":    mount,
					"old":    old,
				})
			}
		}
	}

	kubeconfig, err := startWithDriver(cmd, starter, existing)
	if err != nil {
		node.ExitIfFatal(err)
		exit.Error(reason.GuestStart, "failed to start node", err)
	}

	if err := showKubectlInfo(kubeconfig, starter.Node.KubernetesVersion, starter.Cfg.Name); err != nil {
		klog.Errorf("kubectl info: %v", err)
	}
}
```

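Let's start with the cmd/minikube/cmd/start.go source above, which implements the minikube start command. The package's init() registers the command's flags and binds them to viper; when we run minikube start, cobra invokes runStart(), the function that drives the entire startup process.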

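In early versions, this startup flow had k8sBootstrapper call three methods: UpdateCluster, SetupCerts, and StartCluster, all defined in the Bootstrapper interface. The Bootstrapper interface had two implementations, LocalkubeBootstrapper and KubeadmBootstrapper. LocalkubeBootstrapper was deprecated and removed in later versions, so KubeadmBootstrapper is the one to use.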
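The benefit of the Bootstrapper interface is that the startup flow does not care which implementation it drives. In this sketch, ClusterConfig and the single-argument method signatures are simplified assumptions, and recordingBootstrapper is a hypothetical fake that stands in for KubeadmBootstrapper.

```go
package main

import "fmt"

// ClusterConfig is a hypothetical, heavily trimmed stand-in for config.ClusterConfig.
type ClusterConfig struct {
	Name              string
	KubernetesVersion string
}

// Bootstrapper mirrors the three method names discussed above. The real
// minikube interface has more methods and different signatures.
type Bootstrapper interface {
	UpdateCluster(cfg ClusterConfig) error
	SetupCerts(cfg ClusterConfig) error
	StartCluster(cfg ClusterConfig) error
}

// recordingBootstrapper is a fake implementation that only records calls.
type recordingBootstrapper struct{ calls []string }

func (r *recordingBootstrapper) UpdateCluster(ClusterConfig) error {
	r.calls = append(r.calls, "UpdateCluster")
	return nil
}

func (r *recordingBootstrapper) SetupCerts(ClusterConfig) error {
	r.calls = append(r.calls, "SetupCerts")
	return nil
}

func (r *recordingBootstrapper) StartCluster(ClusterConfig) error {
	r.calls = append(r.calls, "StartCluster")
	return nil
}

// bootstrap drives any Bootstrapper through the three steps in the order
// listed above, so swapping implementations does not change the caller.
func bootstrap(b Bootstrapper, cfg ClusterConfig) error {
	if err := b.UpdateCluster(cfg); err != nil {
		return fmt.Errorf("update cluster: %w", err)
	}
	if err := b.SetupCerts(cfg); err != nil {
		return fmt.Errorf("setup certs: %w", err)
	}
	if err := b.StartCluster(cfg); err != nil {
		return fmt.Errorf("start cluster: %w", err)
	}
	return nil
}

func main() {
	b := &recordingBootstrapper{}
	if err := bootstrap(b, ClusterConfig{Name: "minikube"}); err != nil {
		fmt.Println(err)
		return
	}
	fmt.Println(b.calls)
}
```

Because the caller only sees the interface, removing LocalkubeBootstrapper did not require changing the code that drives the bootstrap steps.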

```go
// ...

// SetupCerts sets up certificates within the cluster.
func (k *Bootstrapper) SetupCerts(k8s config.ClusterConfig, n config.Node) error {
	return bootstrapper.SetupCerts(k.c, k8s, n)
}

// UpdateCluster updates the control plane with cluster-level info.
func (k *Bootstrapper) UpdateCluster(cfg config.ClusterConfig) error {
	images, err := images.Kubeadm(cfg.KubernetesConfig.ImageRepository, cfg.KubernetesConfig.KubernetesVersion)
	if err != nil {
		return errors.Wrap(err, "kubeadm images")
	}

	r, err := cruntime.New(cruntime.Config{
		Type:   cfg.KubernetesConfig.ContainerRuntime,
		Runner: k.c,
		Socket: cfg.KubernetesConfig.CRISocket,
	})
	if err != nil {
		return errors.Wrap(err, "runtime")
	}

	if err := r.Preload(cfg); err != nil {
		klog.Infof("preload failed, will try to load cached images: %v", err)
	}

	if cfg.KubernetesConfig.ShouldLoadCachedImages {
		if err := machine.LoadCachedImages(&cfg, k.c, images, constants.ImageCacheDir, false); err != nil {
			out.FailureT("Unable to load cached images: {{.error}}", out.V{"error": err})
		}
	}

	cp, err := config.PrimaryControlPlane(&cfg)
	if err != nil {
		return errors.Wrap(err, "getting control plane")
	}

	err = k.UpdateNode(cfg, cp, r)
	if err != nil {
		return errors.Wrap(err, "updating control plane")
	}

	return nil
}

// UpdateNode updates a node.
func (k *Bootstrapper) UpdateNode(cfg config.ClusterConfig, n config.Node, r cruntime.Manager) error {
	kubeadmCfg, err := bsutil.GenerateKubeadmYAML(cfg, n, r)
	if err != nil {
		return errors.Wrap(err, "generating kubeadm cfg")
	}

	kubeletCfg, err := bsutil.NewKubeletConfig(cfg, n, r)
	if err != nil {
		return errors.Wrap(err, "generating kubelet config")
	}

	kubeletService, err := bsutil.NewKubeletService(cfg.KubernetesConfig)
	if err != nil {
		return errors.Wrap(err, "generating kubelet service")
	}

	klog.Infof("kubelet %s config:\n%+v", kubeletCfg, cfg.KubernetesConfig)

	sm := sysinit.New(k.c)

	if err := bsutil.TransferBinaries(cfg.KubernetesConfig, k.c, sm); err != nil {
		return errors.Wrap(err, "downloading binaries")
	}

	files := []assets.CopyableFile{
		assets.NewMemoryAssetTarget(kubeletCfg, bsutil.KubeletSystemdConfFile, "0644"),
		assets.NewMemoryAssetTarget(kubeletService, bsutil.KubeletServiceFile, "0644"),
	}

	if n.ControlPlane {
		files = append(files, assets.NewMemoryAssetTarget(kubeadmCfg, bsutil.KubeadmYamlPath+".new", "0640"))
	}

	// Installs compatibility shims for non-systemd environments
	kubeletPath := path.Join(vmpath.GuestPersistentDir, "binaries", cfg.KubernetesConfig.KubernetesVersion, "kubelet")
	shims, err := sm.GenerateInitShim("kubelet", kubeletPath, bsutil.KubeletSystemdConfFile)
	if err != nil {
		return errors.Wrap(err, "shim")
	}
	files = append(files, shims...)

	if err := bsutil.CopyFiles(k.c, files); err != nil {
		return errors.Wrap(err, "copy")
	}

	cp, err := config.PrimaryControlPlane(&cfg)
	if err != nil {
		return errors.Wrap(err, "control plane")
	}

	if err := machine.AddHostAlias(k.c, constants.ControlPlaneAlias, net.ParseIP(cp.IP)); err != nil {
		return errors.Wrap(err, "host alias")
	}

	return nil
}

// ...
```

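In pkg/minikube/bootstrapper/kubeadm/kubeadm.go, UpdateCluster() first loads the Kubernetes images (from a preload tarball, falling back to the local image cache), then, via UpdateNode(), generates the kubelet and kubeadm configuration and copies it, together with the Kubernetes binaries, into the VM so that the kubelet service can be started.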

```go
// ...

// SetupCerts gets the generated credentials required to talk to the APIServer.
func SetupCerts(cmd command.Runner, k8s config.ClusterConfig, n config.Node) error {
	localPath := localpath.Profile(k8s.KubernetesConfig.ClusterName)
	klog.Infof("Setting up %s for IP: %s\n", localPath, n.IP)

	ccs, regen, err := generateSharedCACerts()
	if err != nil {
		return errors.Wrap(err, "shared CA certs")
	}

	xfer, err := generateProfileCerts(k8s, n, ccs, regen)
	if err != nil {
		return errors.Wrap(err, "profile certs")
	}

	xfer = append(xfer, ccs.caCert)
	xfer = append(xfer, ccs.caKey)
	xfer = append(xfer, ccs.proxyCert)
	xfer = append(xfer, ccs.proxyKey)

	copyableFiles := []assets.CopyableFile{}
	defer func() {
		for _, f := range copyableFiles {
			if err := f.Close(); err != nil {
				klog.Warningf("error closing the file %s: %v", f.GetSourcePath(), err)
			}
		}
	}()

	for _, p := range xfer {
		cert := filepath.Base(p)
		perms := "0644"
		if strings.HasSuffix(cert, ".key") {
			perms = "0600"
		}
		certFile, err := assets.NewFileAsset(p, vmpath.GuestKubernetesCertsDir, cert, perms)
		if err != nil {
			return errors.Wrapf(err, "key asset %s", cert)
		}
		copyableFiles = append(copyableFiles, certFile)
	}

	caCerts, err := collectCACerts()
	if err != nil {
		return err
	}

	for src, dst := range caCerts {
		certFile, err := assets.NewFileAsset(src, path.Dir(dst), path.Base(dst), "0644")
		if err != nil {
			return errors.Wrapf(err, "ca asset %s", src)
		}
		copyableFiles = append(copyableFiles, certFile)
	}

	kcs := &kubeconfig.Settings{
		ClusterName:          n.Name,
		ClusterServerAddress: fmt.Sprintf("https://%s", net.JoinHostPort("localhost", fmt.Sprint(n.Port))),
		ClientCertificate:    path.Join(vmpath.GuestKubernetesCertsDir, "apiserver.crt"),
		ClientKey:            path.Join(vmpath.GuestKubernetesCertsDir, "apiserver.key"),
		CertificateAuthority: path.Join(vmpath.GuestKubernetesCertsDir, "ca.crt"),
		ExtensionContext:     kubeconfig.NewExtension(),
		ExtensionCluster:     kubeconfig.NewExtension(),
		KeepContext:          false,
	}

	kubeCfg := api.NewConfig()
	err = kubeconfig.PopulateFromSettings(kcs, kubeCfg)
	if err != nil {
		return errors.Wrap(err, "populating kubeconfig")
	}
	data, err := runtime.Encode(latest.Codec, kubeCfg)
	if err != nil {
		return errors.Wrap(err, "encoding kubeconfig")
	}

	if n.ControlPlane {
		kubeCfgFile := assets.NewMemoryAsset(data, vmpath.GuestPersistentDir, "kubeconfig", "0644")
		copyableFiles = append(copyableFiles, kubeCfgFile)
	}

	for _, f := range copyableFiles {
		if err := cmd.Copy(f); err != nil {
			return errors.Wrapf(err, "Copy %s", f.GetSourcePath())
		}
	}

	if err := installCertSymlinks(cmd, caCerts); err != nil {
		return errors.Wrapf(err, "certificate symlinks")
	}
	return nil
}

// CACerts has cert and key for CA (and Proxy)
type CACerts struct {
	caCert    string
	caKey     string
	proxyCert string
	proxyKey  string
}

// generateSharedCACerts generates CA certs shared among profiles, but only if missing
func generateSharedCACerts() (CACerts, bool, error) {
	regenProfileCerts := false
	globalPath := localpath.MiniPath()
	cc := CACerts{
		caCert:    localpath.CACert(),
		caKey:     filepath.Join(globalPath, "ca.key"),
		proxyCert: filepath.Join(globalPath, "proxy-client-ca.crt"),
		proxyKey:  filepath.Join(globalPath, "proxy-client-ca.key"),
	}
	// ...
```

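In pkg/minikube/bootstrapper/certs.go, SetupCerts() generates the Kubernetes-related certificates (the shared CA and proxy-client CA plus per-profile certificates) along with a kubeconfig, and copies them to the designated paths inside the VM.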

```go
package provision

import (
	"bytes"
	"fmt"
	"text/template"
	"time"

	"github.com/docker/machine/libmachine/auth"
	"github.com/docker/machine/libmachine/drivers"
	"github.com/docker/machine/libmachine/engine"
	"github.com/docker/machine/libmachine/provision"
	"github.com/docker/machine/libmachine/provision/pkgaction"
	"github.com/docker/machine/libmachine/swarm"
	"github.com/spf13/viper"
	"k8s.io/klog/v2"
	"k8s.io/minikube/pkg/minikube/config"
	"k8s.io/minikube/pkg/util/retry"
)

// BuildrootProvisioner provisions the custom system based on Buildroot
type BuildrootProvisioner struct {
	provision.SystemdProvisioner
	clusterName string
}

// NewBuildrootProvisioner creates a new BuildrootProvisioner
func NewBuildrootProvisioner(d drivers.Driver) provision.Provisioner {
	return &BuildrootProvisioner{
		NewSystemdProvisioner("buildroot", d),
		viper.GetString(config.ProfileName),
	}
}

func (p *BuildrootProvisioner) String() string {
	return "buildroot"
}

// CompatibleWithHost checks if provisioner is compatible with host
func (p *BuildrootProvisioner) CompatibleWithHost() bool {
	return p.OsReleaseInfo.ID == "buildroot"
}

// GenerateDockerOptions generates the *provision.DockerOptions for this provisioner
func (p *BuildrootProvisioner) GenerateDockerOptions(dockerPort int) (*provision.DockerOptions, error) {
	var engineCfg bytes.Buffer

	drvLabel := fmt.Sprintf("provider=%s", p.Driver.DriverName())
	p.EngineOptions.Labels = append(p.EngineOptions.Labels, drvLabel)

	noPivot := true
	// Using pivot_root is not supported on fstype rootfs
	if fstype, err := rootFileSystemType(p); err == nil {
		klog.Infof("root file system type: %s", fstype)
		noPivot = fstype == "rootfs"
	}

	engineConfigTmpl := `[Unit]
Description=Docker Application Container Engine
Documentation=https://docs.docker.com
After=network.target minikube-automount.service docker.socket
Requires= minikube-automount.service docker.socket
StartLimitBurst=3
StartLimitIntervalSec=60

[Service]
Type=notify
Restart=on-failure
`
	if noPivot {
		klog.Warning("Using fundamentally insecure --no-pivot option")
		engineConfigTmpl += `
# DOCKER_RAMDISK disables pivot_root in Docker, using MS_MOVE instead.
Environment=DOCKER_RAMDISK=yes
`
	}
	engineConfigTmpl += `
{{range .EngineOptions.Env}}Environment={{.}}
{{end}}

# NOTE: default-ulimit=nofile is set to an arbitrary number for consistency with other
# container runtimes. If left unlimited, it may result in OOM issues with MySQL.
ExecStart=
ExecStart=/usr/bin/dockerd -H tcp://0.0.0.0:2376 -H unix:///var/run/docker.sock --default-ulimit=nofile=1048576:1048576 --tlsverify --tlscacert {{.AuthOptions.CaCertRemotePath}} --tlscert {{.AuthOptions.ServerCertRemotePath}} --tlskey {{.AuthOptions.ServerKeyRemotePath}} {{ range .EngineOptions.Labels }}--label {{.}} {{ end }}{{ range .EngineOptions.InsecureRegistry }}--insecure-registry {{.}} {{ end }}{{ range .EngineOptions.RegistryMirror }}--registry-mirror {{.}} {{ end }}{{ range .EngineOptions.ArbitraryFlags }}--{{.}} {{ end }}
ExecReload=/bin/kill -s HUP \$MAINPID

# Having non-zero Limit*s causes performance problems due to accounting overhead
# in the kernel. We recommend using cgroups to do container-local accounting.
LimitNOFILE=infinity
LimitNPROC=infinity
LimitCORE=infinity

# Uncomment TasksMax if your systemd version supports it.
# Only systemd 226 and above support this version.
TasksMax=infinity
TimeoutStartSec=0

# set delegate yes so that systemd does not reset the cgroups of docker containers
Delegate=yes

# kill only the docker process, not all processes in the cgroup
KillMode=process
...

// Package installs a package
func (p *BuildrootProvisioner) Package(name string, action pkgaction.PackageAction) error {
	return nil
}

// Provision does the provisioning
func (p *BuildrootProvisioner) Provision(swarmOptions swarm.Options, authOptions auth.Options, engineOptions engine.Options) error {
	p.SwarmOptions = swarmOptions
	p.AuthOptions = authOptions
	p.EngineOptions = engineOptions

	klog.Infof("provisioning hostname %q", p.Driver.GetMachineName())
	if err := p.SetHostname(p.Driver.GetMachineName()); err != nil {
		return err
	}

	p.AuthOptions = setRemoteAuthOptions(p)
	klog.Infof("set auth options %+v", p.AuthOptions)

	klog.Infof("setting up certificates")
	configAuth := func() error {
		if err := configureAuth(p); err != nil {
			klog.Warningf("configureAuth failed: %v", err)
			return &retry.RetriableError{Err: err}
		}
		return nil
	}

	err := retry.Expo(configAuth, 100*time.Microsecond, 2*time.Minute)
	if err != nil {
		klog.Infof("Error configuring auth during provisioning %v", err)
		return err
	}

	klog.Infof("setting minikube options for container-runtime")
	if err := setContainerRuntimeOptions(p.clusterName, p); err != nil {
		klog.Infof("Error setting container-runtime options during provisioning %v", err)
		return err
	}

	return nil
}
```

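In pkg/provision/buildroot.go, configureAuth() mainly generates the Docker-related certificates and copies them to the corresponding paths inside the VM (Provision retries it with exponential backoff), while p.GenerateDockerOptions() renders the Docker systemd unit, including the dockerd flags, TLS paths, labels, and registry mirrors, from which Docker is started.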
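GenerateDockerOptions builds the systemd unit by rendering a text/template against the provisioner's options. The sketch below renders a trimmed-down ExecStart line the same way; engineOptions is a hypothetical subset of libmachine's engine options, the TLS and ulimit flags are omitted, and the mirror URL is a placeholder.

```go
package main

import (
	"fmt"
	"strings"
	"text/template"
)

// engineOptions is a hypothetical subset of libmachine's engine options.
type engineOptions struct {
	Labels           []string
	InsecureRegistry []string
	RegistryMirror   []string
}

// execStart is a trimmed-down version of the ExecStart line in the unit above;
// each range action emits one flag per list element.
var execStart = template.Must(template.New("dockerd").Parse(
	`ExecStart=/usr/bin/dockerd -H unix:///var/run/docker.sock ` +
		`{{range .Labels}}--label {{.}} {{end}}` +
		`{{range .InsecureRegistry}}--insecure-registry {{.}} {{end}}` +
		`{{range .RegistryMirror}}--registry-mirror {{.}} {{end}}`))

// renderExecStart executes the template and trims the trailing space.
func renderExecStart(opts engineOptions) (string, error) {
	var b strings.Builder
	if err := execStart.Execute(&b, opts); err != nil {
		return "", err
	}
	return strings.TrimSpace(b.String()), nil
}

func main() {
	opts := engineOptions{
		// GenerateDockerOptions appends a provider=<driver> label like this one.
		Labels:         []string{fmt.Sprintf("provider=%s", "kvm2")},
		RegistryMirror: []string{"https://mirror.example.com"},
	}
	line, err := renderExecStart(opts)
	if err != nil {
		fmt.Println(err)
		return
	}
	fmt.Println(line)
}
```

Templating keeps the unit file declarative: adding a registry mirror or label only changes the data passed in, not the code that writes the file.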

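That concludes the walkthrough of the core source code; if you are interested, download the latest source from the official repository and study it directly. Based on the analysis of these core components, the Minikube startup process can be summarized as follows: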

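1. Start the VM through libmachine, generate Docker's certificates and configuration files, and start the Docker service

2. Generate the Kubernetes configuration files, addons, and certificates, and copy them to the corresponding paths in the VM

3. Using those configuration files, generate the script that starts the Kubernetes cluster; the cluster can then be accessed through a client such as kubectl.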

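For installing and deploying Minikube, see the article "A Brief Look at Kubernetes Build Tools" (Kubernetes 构建工具浅析). That concludes this brief overview of Minikube's internals; feel free to reach out and discuss.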
